TensorFlow

Basic Interview Q&A

1. What is TensorFlow?

TensorFlow is an open-source software library developed by Google for numerical computation and machine learning. It is designed to efficiently handle large-scale machine learning and deep learning tasks.

TensorFlow provides a flexible and comprehensive ecosystem of tools, libraries, and resources that allow developers to build and deploy machine learning models.

2. What are the primary features of TensorFlow?

The prominent features of TensorFlow are:

Scalability: TensorFlow can efficiently scale from a single device to multiple devices, including multi-GPU and multi-node (cluster) setups to allow developers to train and run complex ML models seamlessly.

Flexibility: TensorFlow supports various types of machine learning and deep learning models including CNNs, RNNs, and LSTMs.

Portability: TensorFlow runs on multiple platforms, such as CPUs, GPUs, and TPUs (Tensor Processing Units), and can be deployed on diverse systems like mobile devices, web browsers, and cloud platforms.

High-level APIs: TensorFlow offers high-level APIs, such as Keras, which provide an intuitive and user-friendly interface for building, training, and deploying models.

TensorBoard: TensorFlow includes a powerful visualization tool called TensorBoard which helps in debugging models, monitoring training progress, and visualizing computational graphs, model structures, and more.

3. Explain the concept of tensors in TensorFlow.

In TensorFlow, tensors are the fundamental data structures used to represent and manipulate data. The term "tensor" refers to a mathematical concept that generalizes vectors and matrices to higher dimensions.

Tensors are n-dimensional arrays (where n can be 0, 1, 2, or more) that contain elements of a single data type such as numbers (integers or floating-point values).
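As a minimal sketch of tensors of different ranks, assuming TensorFlow 2.x with eager execution (the variable names are illustrative):

```python
import tensorflow as tf

scalar = tf.constant(3.0)                       # rank 0, shape ()
vector = tf.constant([1, 2, 3])                 # rank 1, shape (3,)
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])  # rank 2, shape (2, 2)
cube = tf.zeros([2, 3, 4])                      # rank 3, shape (2, 3, 4)

# Every tensor has a rank, a shape, and a single element dtype.
for t in (scalar, vector, matrix, cube):
    print(t.shape.rank, t.shape, t.dtype.name)
```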

4. Explain the differences between TensorFlow and PyTorch.

TensorFlow and PyTorch are popular deep learning frameworks. TensorFlow emphasizes scalability and production deployment with a graph-based approach and a wide range of tools. PyTorch, on the other hand, prioritizes simplicity and flexibility, providing dynamic computation graphs and an intuitive interface.

TensorFlow has a larger ecosystem and supports more platforms, while PyTorch is favored for its ease of use and strong support for research.

5. What are the different types of tensors in TensorFlow?

TensorFlow supports a variety of tensor types:

tf.Variable: It is used to store mutable state, most often the weights and biases of machine learning models. Its values can be changed by running ops such as assign on it.

tf.constant: This is a tensor initialized with a constant value. Its values can't be changed once they're defined.

tf.placeholder: It is used to feed actual training examples into a graph. It belongs to TensorFlow 1.x (in TensorFlow 2.x it is available only as tf.compat.v1.placeholder). In TensorFlow 2.x, inputs are usually passed directly as arguments, for example to the fit method or to a function decorated with @tf.function.

tf.SparseTensor: Represents a tensor with a lot of zeroes. Only non-zero values need to be stored which saves a lot of memory. It's used for representing sparse data like a large word vocabulary.

tf.RaggedTensor: Used for representing variable-length dimensions. It can handle different shapes and sizes.

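tf.TensorArray: It is a list-like structure of tensors that can be written to and read from dynamically, mainly inside loops. By default its elements are expected to share one shape; tensors of different shapes can be stored when shape inference is disabled (infer_shape=False).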

tf.data.Dataset: Generally used for input pipelines. This is more of a high-level abstraction and technically not a 'tensor', but a collection of tensors.
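A few of the types above can be sketched as follows, assuming TensorFlow 2.x; the values and variable names are illustrative only:

```python
import tensorflow as tf

# Mutable state: assign() changes the value in place.
weights = tf.Variable([1.0, 2.0])
weights.assign([3.0, 4.0])

# Immutable value.
bias = tf.constant([0.5, 0.5])

# Sparse: only the non-zero entries and their indices are stored.
sparse = tf.sparse.SparseTensor(
    indices=[[0, 1], [2, 3]], values=[7, 9], dense_shape=[3, 4]
)
dense = tf.sparse.to_dense(sparse)

# Ragged: rows may have different lengths.
ragged = tf.ragged.constant([[1, 2, 3], [4], [5, 6]])
row_lengths = ragged.row_lengths()
```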

6. Describe the TensorFlow execution model.

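The TensorFlow execution model involves defining and executing computational graphs to perform operations on tensors. In TensorFlow 1.x the two phases below are explicit; in TensorFlow 2.x operations run eagerly by default, and graphs are built by tracing functions decorated with tf.function. Graph-based execution can be divided into two parts: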

Graph construction: In the TensorFlow execution model, computation is represented as a directed acyclic graph (DAG). Nodes in the graph correspond to operations (ops), while edges represent tensors that flow between these nodes. The graph construction phase involves creating tensors and defining operations that compose the graph. However, no actual computation is performed during this stage.

Graph execution: Once the computational graph has been defined, it needs to be executed in order to obtain the results of the computation. The execution engine takes care of executing the operations in the correct order, resolving dependencies, and optimizing the execution.

7. Mention some APIs used outside the core TensorFlow project.

TFLearn:

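TFLearn provides a high-level API that makes neural network creation and training quick and simple. It is built on top of TensorFlow and is fully compatible with it. It is distributed as the separate tflearn package and should not be confused with tf.contrib.learn, a TensorFlow 1.x module that was removed in TensorFlow 2.x.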

TensorLayer:

TensorLayer is a deep learning and reinforcement learning library built on TensorFlow. It is intended for scientists and engineers. It offers a large array of programmable neural layers/functions, which are essential for developing real-world AI applications.

PrettyTensor:

Pretty Tensor provides a high-level TensorFlow building API. It provides thin wrappers for Tensors, allowing you to easily design multi-layer neural networks.

Pretty Tensor is a collection of objects that behave like Tensors. It also includes a chainable object syntax for quickly defining neural networks and other layered architectures in TensorFlow.

Sonnet:

Sonnet is a TensorFlow-based library for building complex neural networks. It is developed by DeepMind and takes a modular approach.

8. What is a TensorFlow graph?

A TensorFlow computational graph, or simply a TensorFlow graph, is a network of nodes, with each node representing an operation in a computation. Each operation, or op, can be as simple as addition or multiplication, or more complex, like a multivariate equation. The edges between the nodes represent the tensors that flow between operations.

Here's a simplified breakdown of the components of a TensorFlow computational graph:

Node: Represents a certain operation or a computation. Each node takes zero or more tensors as inputs and produces a tensor as an output.

Edges: These are the tensors. They carry the "output" value of an operation (or a node) to another node.

Operation: This is a function that takes in tensor(s), and it may also produce a tensor as output.

9. What is a TensorFlow session?

In TensorFlow 1.x, a Session is a class for running TensorFlow computational graphs (in TensorFlow 2.x it is available as tf.compat.v1.Session). It encapsulates the environment in which Operation objects are executed and Tensor objects are evaluated. It allocates resources (on one or more machines) and holds the actual values of intermediate results and variables.

Using a Session, you can execute operations in a context. This is at the core of TensorFlow 1.x's design: first, you describe and set up the graph, then these computations are executed with a Session.
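The build-then-run pattern can be sketched as follows. This assumes a TensorFlow 2.x installation and therefore uses the tf.compat.v1 compatibility API; in TensorFlow 1.x the class is tf.Session.

```python
import tensorflow as tf

# Phase 1: build the graph. Nothing is computed yet.
graph = tf.Graph()
with graph.as_default():
    a = tf.constant(2.0)
    b = tf.constant(3.0)
    total = tf.add(a, b)

# Phase 2: run the graph inside a session to get concrete values.
with tf.compat.v1.Session(graph=graph) as sess:
    result = sess.run(total)

print(result)  # 5.0
```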

10. What is TensorBoard and its uses?

TensorBoard is a collection of visualization tools for inspecting and understanding TensorFlow runs and graphs. It lets you view the computational graph, plot quantitative metrics about its execution, and display additional data, such as images, that pass through it.

Here's what you can do with TensorBoard:

Visualize your Model: TensorBoard has a built-in visualizer, called a Graph Explorer, that visually represents your TensorFlow graph. This makes it easy to understand, debug, and optimize the program.

Monitor Metrics: TensorBoard allows tracking experiment metrics like loss and accuracy, and comparing and visualizing these metrics over time.

Histograms: It can visualize histograms of tensors over time. Histograms can show the changing distribution of variable or gradient values.

Data Projector: You can visualize high-dimensional data, embedding all your data in a 3D space so that you can intuitively understand your data.

TensorBoard Scalars: This allows you to track multiple runs, compare them, and visualize scalar information.

Image, Audio, and Text Views: TensorBoard can display image, audio, and text data, which is particularly useful for understanding and debugging neural networks dealing with such data.

Profiler: This helps in understanding the time and space complexities of your model, tracking where every millisecond is going.
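As a minimal sketch of how scalar metrics reach TensorBoard, assuming TensorFlow 2.x; the log directory and the "loss" values below are placeholders, not output of a real training run:

```python
import math
import os
import tempfile

import tensorflow as tf

log_dir = tempfile.mkdtemp()  # hypothetical log location
writer = tf.summary.create_file_writer(log_dir)

with writer.as_default():
    for step in range(100):
        fake_loss = math.exp(-step / 20.0)  # stand-in for a real training loss
        tf.summary.scalar("loss", fake_loss, step=step)
writer.flush()

print(os.listdir(log_dir))
```

Pointing TensorBoard at the directory (tensorboard --logdir followed by the path) should then show the curve in the scalars view.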

11. What is TensorFlow serving?

TensorFlow Serving is a flexible, high-performance serving system developed by Google for machine learning models. It is designed for the production environment rather than the research environment, making it easy to deploy new algorithms and experiments while keeping the same server architecture and APIs. TensorFlow Serving provides out-of-the-box integration with TensorFlow models, but it can be easily extended to serve other types of models and data.

One of the primary benefits of TensorFlow Serving is its ability to handle model versioning. When you train a new version of a model, you simply tell TensorFlow Serving to start serving the new version, and it will begin to transition incoming inferencing requests over to the new version without any downtime. This system allows you to test new versions of models in a production environment and makes rolling back to a previous version straightforward if a problem arises with the new model.

12. What is the role of placeholders in TensorFlow?

In TensorFlow 1.x, placeholders serve as input nodes in the computational graph. They are used to feed input data into the graph during the execution phase. Placeholders allow for dynamic data input, as their values are assigned at runtime rather than being fixed during graph construction. In TensorFlow 2.x they are available only through tf.compat.v1.placeholder.
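A minimal sketch of feeding a placeholder, assuming a TensorFlow 2.x installation and the tf.compat.v1 compatibility API (the names x and doubled are illustrative):

```python
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    # The batch dimension is left open (None) and fixed only at run time.
    x = tf.compat.v1.placeholder(tf.float32, shape=[None, 2], name="x")
    doubled = x * 2.0

with tf.compat.v1.Session(graph=graph) as sess:
    # feed_dict supplies the placeholder's value for this run.
    out = sess.run(doubled, feed_dict={x: [[1.0, 2.0], [3.0, 4.0]]})
```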

13. What is an embedding projector?

The Embedding Projector displays high-dimensional data. For example, you can view input data after a model has embedded it in a high-dimensional space. The Embedding Projector reads the embeddings from the model checkpoint file, and it can be configured with additional metadata such as a vocabulary file or sprite images.

14. Explain TensorFlow variables and their importance.

TensorFlow variables are mutable, stateful objects that store and manage model parameters, such as weights and biases, in neural networks. They play a crucial role in machine learning models as they persist and allow updates to their values over training iterations.

Unlike tensors, which are immutable, TensorFlow variables are designed to store and modify the state of models. They allow models to learn from data, adjust their parameters, and capture complex relationships in the input data.

15. How do you create and initialize TensorFlow variables?

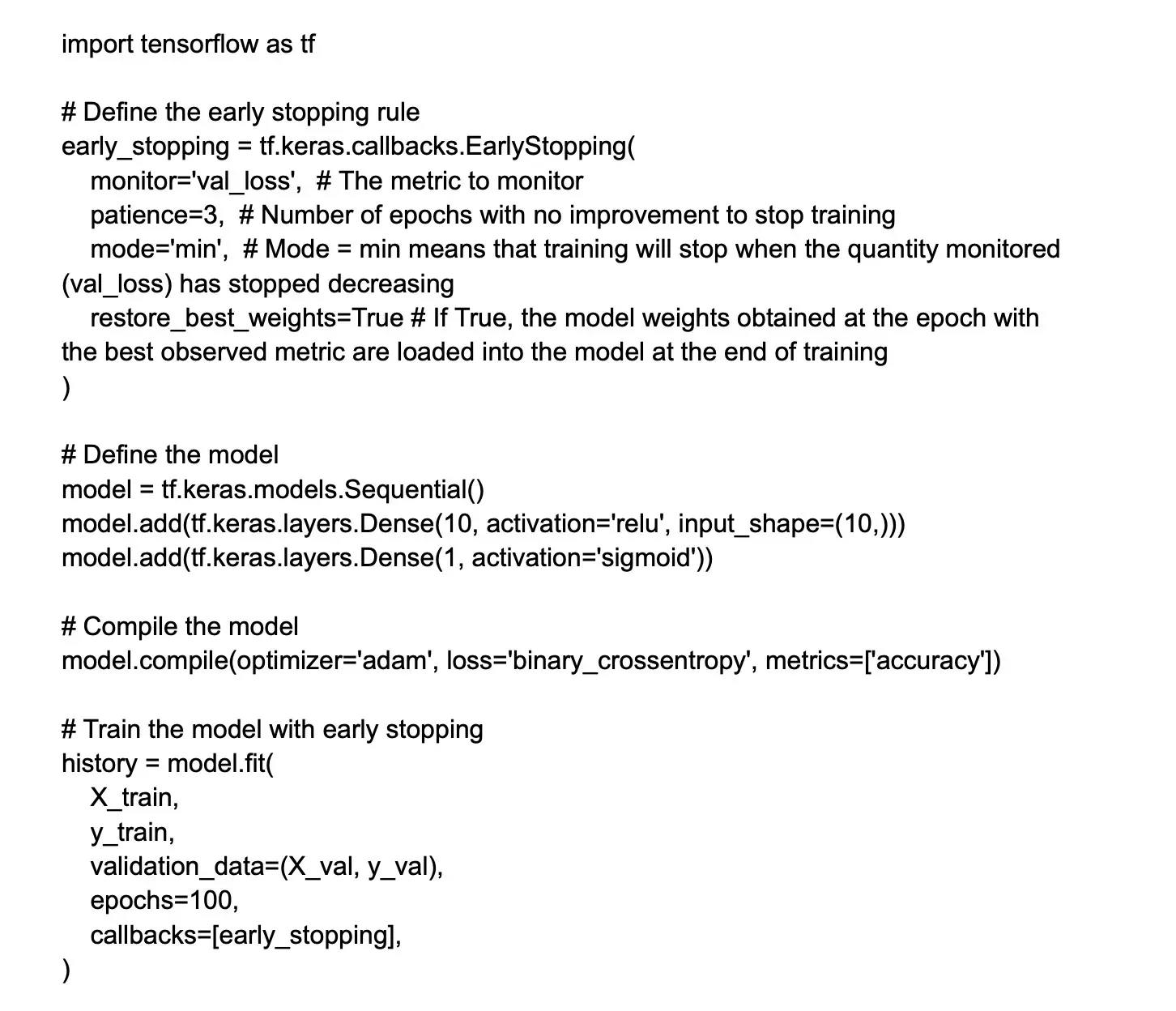

You can create TensorFlow variables using the tf.Variable function. To create a variable, you need to provide an initial value. This determines the shape and data type of the variable. Optionally, you can specify a name and other configurations.

Here's an example of creating and initializing a variable in TensorFlow 2.x:
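A minimal sketch of such an example, assuming TensorFlow 2.x with eager execution (the names w and b are illustrative):

```python
import tensorflow as tf

# The initial value fixes the shape (2, 2) and the dtype (float32).
w = tf.Variable([[1.0, 2.0], [3.0, 4.0]], name="w")
b = tf.Variable(tf.zeros([2]), name="b")

# Variables are usable immediately; no explicit initializer is run.
b.assign_add([1.0, 1.0])
print(w.shape, w.dtype.name, b.numpy())
```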

In TensorFlow 2.x, with eager execution, variables are automatically initialized when they are created. In TensorFlow 1.x, however, you need to initialize variables explicitly before using them in a session.

16. What are the primary components of TensorFlow architecture?

The primary components of the TensorFlow architecture, specifically of TensorFlow Serving, are:

Servables: Servables are the central abstraction in TensorFlow Serving. They are the underlying objects, such as a deployed model, that clients use to perform computation. They are loaded, served, and unloaded by a Manager.

Loaders: Loaders manage the life cycle of a servable. They are responsible for loading a servable from a persistent store, initializing it, and unloading it when it is no longer needed.

Sources: Sources find and provide servables, for example by discovering new model versions on disk, and supply a Loader for each version they make available.

Managers: Managers handle the full life cycle of servables, including loading, serving, and unloading them, as well as the resources they use.

Core: TensorFlow Serving Core manages the life cycle and metrics of servables, treating servables and loaders as opaque objects.

17. What is the use of the tf.data module?

The tf.data module in TensorFlow is a powerful, comprehensive, and efficient library for creating input pipelines to preprocess, load, and transform data for ML models.

The module enables developers to work with complex and large-scale datasets by providing a high-level API to handle data in a consistent and optimized manner. The use of the tf.data module simplifies data manipulation and ensures efficient memory usage and performance during model training and evaluation.
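A small input pipeline might look like the sketch below, assuming TensorFlow 2.4 or later (for tf.data.AUTOTUNE); the toy data and the scaling step are illustrative:

```python
import tensorflow as tf

features = tf.range(10, dtype=tf.float32)
labels = features * 2.0

dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=10, seed=0)      # randomize example order
    .map(lambda x, y: (x / 10.0, y))      # preprocess each example
    .batch(4)                             # group examples into batches
    .prefetch(tf.data.AUTOTUNE)           # overlap preprocessing and consumption
)

# 10 examples in batches of 4 give batch sizes 4, 4, and 2.
batch_sizes = [int(x.shape[0]) for x, _ in dataset]
```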

18. Explain the concept of eager execution in TensorFlow.

Eager execution is a programming environment in TensorFlow that runs operations immediately and returns concrete values instead of building a computational graph to run later. Available in core TensorFlow since version 1.7 and enabled by default in TensorFlow 2.x, it replaces the build-a-graph-then-run-it workflow with direct execution of operations in Python, so code reads like standard, idiomatic Python.

Below are some fundamental characteristics and benefits of eager execution:

Concrete Values: In eager execution, operations and computations return concrete values instead of computational graph entities. This makes it easier to keep track of variables and their values and improves debugging.

Simplified Flow: The flow of code execution is simplified because you don't have to maintain session objects or manually handle placeholders and feeds.

Dynamic Networks: Eager execution is compatible with Python control flow, which includes loops, conditional statements, recursion, etc. This makes it easier to build and use more complex and dynamic models.

Natural Python Integration: It enables more natural integration with Python, allowing you to use any Python data structures, libraries, and magic methods. You can write more idiomatic Python code.

Simplified Grad Function: The tf.GradientTape API enables automatic differentiation and gradient computation - useful for machine learning training.

Rapid Debugging: With eager execution, you can identify and debug errors immediately because each operation is evaluated on the fly. Standard Python debugging tools can be used.
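Several of these points can be seen in a short sketch, assuming TensorFlow 2.x where eager execution is on by default:

```python
import tensorflow as tf

x = tf.constant([1.0, 2.0, 3.0])
y = x * 2.0        # evaluated immediately, no session needed
print(y.numpy())   # a concrete NumPy array

# Ordinary Python control flow can depend on tensor values.
total = tf.constant(0.0)
for value in y:
    if value > 3.0:
        total += value
```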

19. Describe the TensorFlow API hierarchy.

TensorFlow offers a hierarchy of APIs that provide different levels of abstraction and complexity to cater to various developer needs. The hierarchy ranges from low-level to high-level, with higher levels built on top of the lower ones.

Low-level APIs: The low-level TensorFlow Core API provides the most flexibility in terms of building custom models and operations. However, working with it can be time-consuming and complex, particularly for beginners.

Mid-level APIs: Mid-level APIs enable developers to build input pipelines, process image and signal data, and handle other common machine learning tasks efficiently.

High-level APIs: TensorFlow's high-level APIs are designed to be more user-friendly and offer simplified developer experiences. These APIs include Keras (tf.keras) and Estimator (tf.estimator). They provide built-in support for standard layers and operations, simplifying the process of building, training, and evaluating models.

20. What are TensorFlow constants?

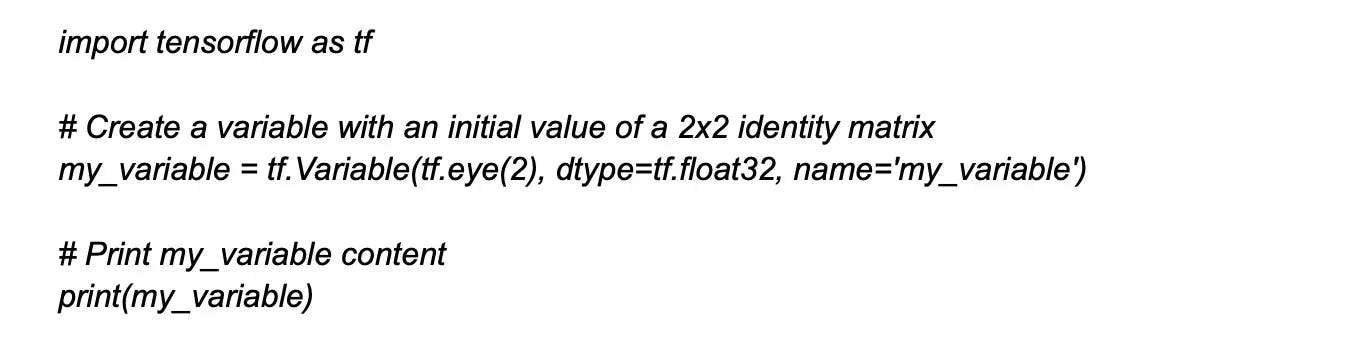

TensorFlow constants are tensors with immutable values that remain fixed during the entire computation. They are created using the tf.constant function which initializes the tensor with a specified value, shape, and data type.

Constants can be used in operations as input and can participate in the computation by flowing through the TensorFlow graph. However, their values cannot be changed during execution.

21. How to create TensorFlow constants?

To create TensorFlow constants, you can use the tf.constant() function provided by the TensorFlow library. Here's an example of the same:
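A minimal sketch of such an example, assuming TensorFlow 2.x (the variable names are illustrative):

```python
import tensorflow as tf

scalar = tf.constant(5)                                   # int32 scalar
vector = tf.constant([1.0, 2.0, 3.0])                     # float32 vector
matrix = tf.constant([[1, 2], [3, 4]], dtype=tf.float32)  # explicit dtype
filled = tf.constant(7, shape=[2, 3])                     # scalar repeated to fill the shape
```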

In the above code, the tf.constant() function is used to create TensorFlow constants. You can pass different arguments to this function, such as scalar values, lists, matrices, data types, and shapes, to create constants of various types.

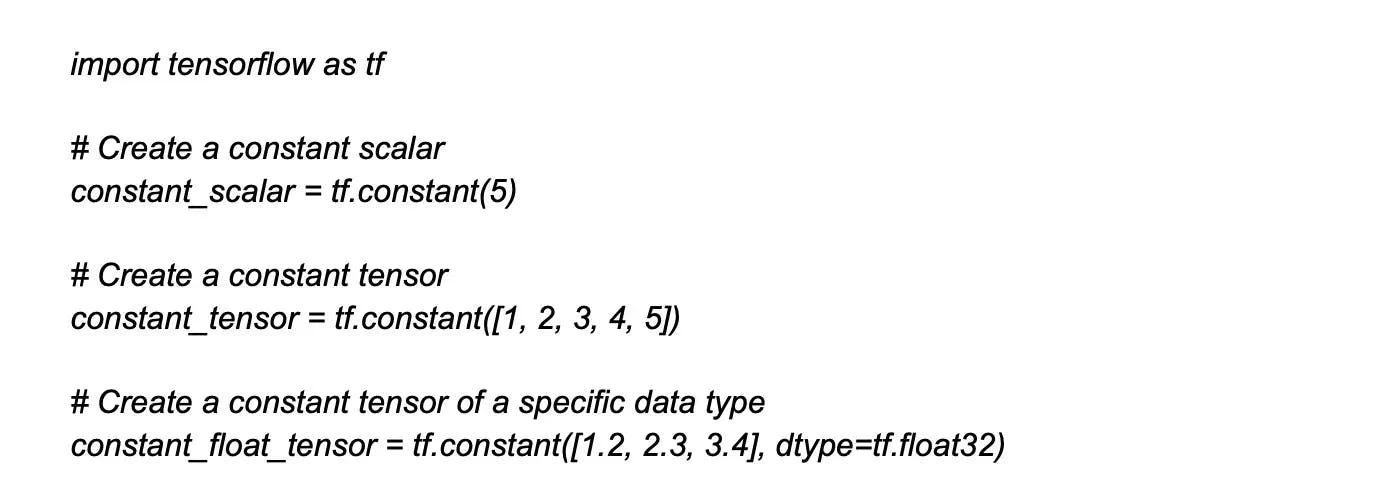

22. How do you define operations in TensorFlow?

In TensorFlow, operations (or ops) are defined as instances of the tf.Operation class. An operation represents a computational node in a TensorFlow graph, taking zero or more Tensor objects as input and producing zero or more Tensor objects as output. The operations can be as simple as addition or multiplication, or as complex as some custom computation.

The simplest way to create operations is by using TensorFlow's rich set of operators, functions and classes in a Python script. For example:

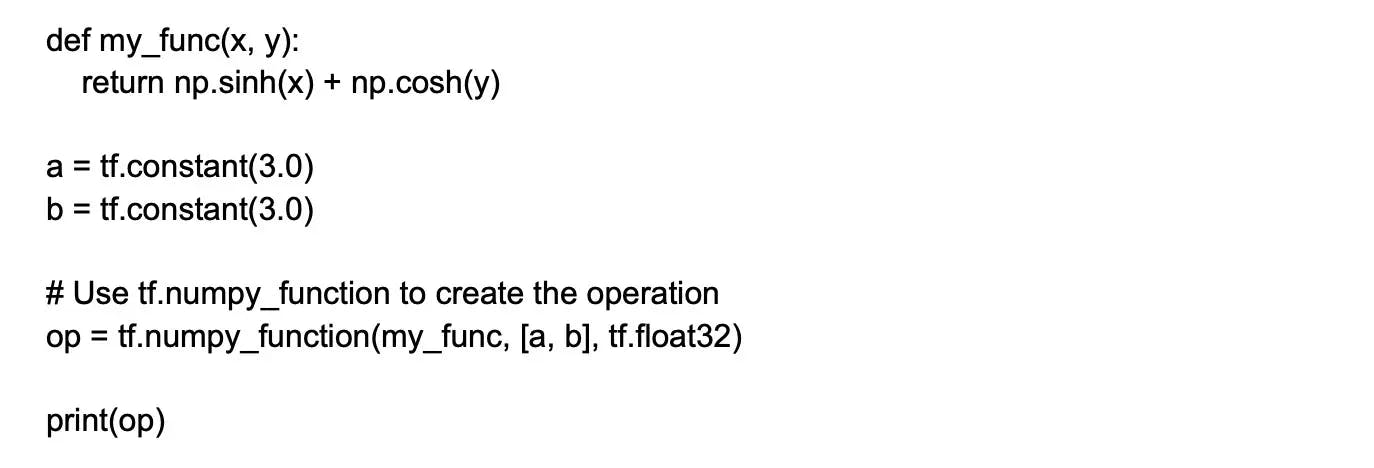

It is important to note that in TensorFlow 2.x, Eager execution is enabled by default and hence operations return values immediately. For defining more complex custom operations, you can use tf.py_function or tf.numpy_function.

Here is an example:
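A minimal sketch of such a custom operation, assuming TensorFlow 2.x; the names my_func and op follow the text, while the function body is purely illustrative:

```python
import tensorflow as tf

def my_func(x):
    # Arbitrary Python code; x arrives as an eager tensor.
    return x * x + 1.0

x = tf.constant([1.0, 2.0, 3.0])

# Wrap the Python function so TensorFlow can call it as an op.
op = tf.py_function(func=my_func, inp=[x], Tout=tf.float32)
```

With eager execution enabled, op already holds the computed tensor; inside a graph it would be a node that runs my_func when executed.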

The operation op calls the Python function my_func whenever the operation is executed as part of a computation. Be aware, however, that defining custom operations in Python means they can't be executed in a non-Python environment that TensorFlow supports, such as a mobile device.

So, operations are usually defined as nodes in a computational graph, and TensorFlow offers a vast array of built-in operations, but also allows you to define custom operations for more complex needs.

23. What are the benefits of using TensorFlow for machine learning?

Here are some of the key benefits of using TensorFlow for machine learning:

Flexibility: TensorFlow provides a flexible architecture that allows users to define and build machine learning and deep learning models to accommodate diverse requirements.

Scalability: TensorFlow can scale from single-device prototyping to multi-device or even distributed training on large clusters. It supports various deployment scenarios including CPUs, GPUs, and TPUs.

Eager execution: TensorFlow 2.x enables eager execution by default, which makes debugging easier and gives a more interactive development experience with immediate evaluation of operations and functions.

High-level APIs: TensorFlow offers high-level APIs, such as Keras, which simplifies the model-building process with an expressive and user-friendly interface.

Model deployment: TensorFlow offers TensorFlow Serving, TensorFlow Lite, and TensorFlow.js to serve models on different platforms including web browsers, cloud servers, and edge devices. This simplifies the deployment process and allows developers to reach a broader audience.

24. Explain the role of GPUs in TensorFlow computation.

GPUs (graphics processing units) play a significant role in accelerating TensorFlow computations for machine learning and deep learning applications. Compared to CPUs, GPUs have a parallel architecture with numerous cores which enables them to handle multiple computations simultaneously.

As a result, TensorFlow experiences considerable performance improvements when offloading workloads to GPUs, especially for tasks like training large neural networks and image processing.
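As a rough sketch of placing work on a GPU when one is present, assuming TensorFlow 2.x; the code falls back to the CPU otherwise, and the matrix size is arbitrary:

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")
device = "/GPU:0" if gpus else "/CPU:0"

# Ops created in this block are placed on the chosen device.
with tf.device(device):
    a = tf.random.normal([256, 256])
    b = tf.random.normal([256, 256])
    product = tf.matmul(a, b)

print(device, product.shape)
```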

25. How does automatic differentiation work in TensorFlow?

Automatic differentiation is a key technique used in TensorFlow and other deep learning frameworks to efficiently compute gradients of mathematical expressions with respect to their inputs. It plays a crucial role in training neural networks using methods like gradient descent.

TensorFlow utilizes a technique called ‘reverse-mode automatic differentiation’, which is also known as backpropagation. This technique allows for the efficient computation of gradients in computational graphs.

26. What is GradientTape in TensorFlow?

GradientTape is a mechanism provided by the TensorFlow API that enables automatic differentiation and gradient computation. It is a key component for computing gradients in TensorFlow models.

GradientTape is a context manager that records all operations executed within its scope, building a computational graph for automatic differentiation. When you want to compute gradients, you need to explicitly enter the GradientTape context using tf.GradientTape().
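A minimal sketch of recording a computation and asking for its gradient, assuming TensorFlow 2.x (the function y = x^2 + 2x is just an illustration):

```python
import tensorflow as tf

x = tf.Variable(3.0)

# Operations on x inside the context are recorded on the tape.
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x

# dy/dx = 2x + 2, which is 8 at x = 3.
grad = tape.gradient(y, x)
```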

27. Describe basic TensorFlow mathematical operations.

TensorFlow supports basic arithmetic operations such as addition (tf.add()), subtraction (tf.subtract()), multiplication (tf.multiply()), division (tf.divide()), and exponentiation (tf.pow()).

These operations are applied element-wise to corresponding elements of the input tensors. TensorFlow also provides matrix multiplication (tf.matmul()), square root (tf.sqrt()), and logarithm (tf.math.log()) among its basic mathematical operations.
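The difference between element-wise and matrix operations can be sketched as follows, assuming TensorFlow 2.x (the input values are illustrative):

```python
import tensorflow as tf

a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
b = tf.constant([[5.0, 6.0], [7.0, 8.0]])

added = tf.add(a, b)            # element-wise: [[6, 8], [10, 12]]
multiplied = tf.multiply(a, b)  # element-wise: [[5, 12], [21, 32]]
matmul = tf.matmul(a, b)        # matrix product: [[19, 22], [43, 50]]
roots = tf.sqrt(a)              # element-wise square root
logs = tf.math.log(a)           # element-wise natural logarithm
```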

28. How can you visualize graphs in TensorFlow?

Visualizing computational graphs in TensorFlow can be done using TensorBoard, a built-in visualization and debugging tool. TensorBoard enables you to track various aspects of your TensorFlow model such as graph structures, metrics, and model weights.

29. What is TensorFlow Lite?

TensorFlow Lite is a lightweight framework developed by Google that is designed specifically for running machine learning models on resource-constrained devices like mobile devices, embedded systems, and IoT devices.

It is an extension of TensorFlow and provides tools and libraries to optimize and deploy machine learning models for edge computing scenarios. The primary goal of TensorFlow Lite is to enable efficient inference on devices with limited computational power and memory.

30. Define the tf.function.

tf.function is a decorator provided by the TensorFlow API that allows you to convert Python functions into TensorFlow graph functions. It is used to optimize the performance of TensorFlow code by leveraging the benefits of graph execution.

When you decorate a Python function with tf.function, TensorFlow traces the operations performed in that function and converts them into a graph representation. This graph is then optimized and can be executed efficiently, offering potential performance improvements over executing the same operations in a purely imperative manner.
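Tracing can be observed with a small sketch, assuming TensorFlow 2.x; scaled_sum and trace_log are hypothetical names used only for illustration:

```python
import tensorflow as tf

trace_log = []  # Python side effects run only while the function is traced

@tf.function
def scaled_sum(x, y):
    trace_log.append("traced")
    return tf.reduce_sum(x * y)

# First call traces the function into a graph, then runs it.
r1 = scaled_sum(tf.constant([1.0, 2.0]), tf.constant([3.0, 4.0]))
# Same input shapes and dtypes: the existing graph is reused.
r2 = scaled_sum(tf.constant([5.0, 6.0]), tf.constant([7.0, 8.0]))
```

After both calls trace_log holds a single entry, since the Python body ran only during the one trace.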

31. Explain the purpose of TensorFlow Datasets.

TensorFlow Datasets (TFDS) is a collection of high-quality, ready-to-use datasets with easy-to-use APIs. It aims to simplify and streamline the process of loading, preprocessing, and fetching datasets for machine learning and deep learning projects when using TensorFlow.

It helps researchers and practitioners focus on their models and training processes, rather than dealing with the time-consuming, challenging tasks of acquiring, cleaning, and processing raw data.

32. What role do tf.SavedModel & tf.Checkpoint play in model management?

SavedModel and checkpoints are TensorFlow mechanisms for model management, particularly for saving and loading models and their weights during training, evaluation, and deployment. In the Python API they are exposed as the tf.saved_model module and the tf.train.Checkpoint class rather than as tf.SavedModel and tf.Checkpoint.

SavedModel is a serialized format for TensorFlow models that stores both the model architecture (the computation graph) and the model's trained parameters (weights and biases). Its primary use is to save a model and its weights in a way that can be reloaded and restored for a variety of tasks.

A checkpoint is a lightweight format focused on saving and managing the model's internal state during training, such as model weights and optimizer state. Unlike SavedModel, it does not save the model architecture but can be used in combination with other saving strategies.
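The two mechanisms can be contrasted in a short sketch, assuming TensorFlow 2.x and the tf.train.Checkpoint and tf.saved_model APIs; the Scale module and the temporary paths are hypothetical:

```python
import os
import tempfile

import tensorflow as tf

# Checkpoint: saves variable values only.
weights = tf.Variable([1.0, 2.0])
ckpt = tf.train.Checkpoint(weights=weights)
save_path = ckpt.save(os.path.join(tempfile.mkdtemp(), "ckpt"))

weights.assign([0.0, 0.0])   # overwrite the state ...
ckpt.restore(save_path)      # ... and bring the saved values back

# SavedModel: saves the computation together with the variables.
class Scale(tf.Module):
    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(2.0)

    @tf.function(input_signature=[tf.TensorSpec([None], tf.float32)])
    def __call__(self, x):
        return x * self.factor

export_dir = tempfile.mkdtemp()
tf.saved_model.save(Scale(), export_dir)
loaded = tf.saved_model.load(export_dir)  # the Scale class is not needed to load
out = loaded(tf.constant([1.0, 2.0]))
```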

33. What are the popular applications of TensorFlow in machine learning and deep learning?

TensorFlow is used extensively for a wide range of machine learning and deep learning applications such as:

Image classification: TensorFlow is widely used to train and deploy convolutional neural networks (CNNs) to classify images into different categories.

Natural language processing (NLP): TensorFlow is extensively used in NLP tasks such as sentiment analysis, named entity recognition (NER), question-answering, and machine translation.

Text and speech generation: With TensorFlow, you can develop models for seq2seq tasks like generating text or creating chatbots as well as text-to-speech and speech-to-text applications.

Anomaly detection: TensorFlow can be used to build models to detect anomalous data points or patterns in various domains such as finance, cybersecurity, and healthcare.

Recommendation systems: TensorFlow is widely used to develop recommendation systems to suggest personalized content like movies, music, or products to users.

34. How do you install TensorFlow on your system?

To install TensorFlow, follow these general steps:

Check system requirements: Ensure that your system meets the requirements for installing TensorFlow. It supports various platforms including Linux, macOS, Windows, and different versions of Python.

Create a virtual environment (optional): It is recommended to create a virtual environment to isolate the TensorFlow installation from your system's Python environment. This step is optional but can help manage dependencies and avoid conflicts with other packages.

Install TensorFlow: There are multiple ways to install TensorFlow, depending on your preferences and requirements.

Installation using conda: If you are using conda as your package manager, you can create and activate a conda environment and install TensorFlow using the following command:

conda install tensorflow

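For GPU support with conda, you can use the command below. Note that tensorflow-gpu is a legacy package name; with pip, recent releases of the standard tensorflow package include GPU support.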

conda install tensorflow-gpu

Verify the installation: After the installation completes, verify that TensorFlow is installed correctly by importing it in a Python interpreter or a Python script and checking for any error messages.
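The verification step can be sketched as a short script, assuming TensorFlow 2.x is the installed version:

```python
import tensorflow as tf

print("TensorFlow version:", tf.__version__)
print("GPUs visible:", tf.config.list_physical_devices("GPU"))

# A tiny computation confirms that the runtime actually works.
check = tf.reduce_sum(tf.constant([1, 2, 3]))
print(int(check))  # 6
```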

35. How do you load a dataset using TensorFlow?

To load a dataset using TensorFlow, you can use the tensorflow_datasets (TFDS) package which provides a range of curated datasets for various machine learning tasks.

First, install TFDS using pip install tensorflow-datasets. In your code, import the package and use the tfds.load() function to fetch the data. This function takes the dataset name and optionally, the split (like 'train' or 'test') and data preprocessing functions.

The loaded data is returned as a tf.data.Dataset object which can be easily used for training and evaluating machine learning models with TensorFlow.

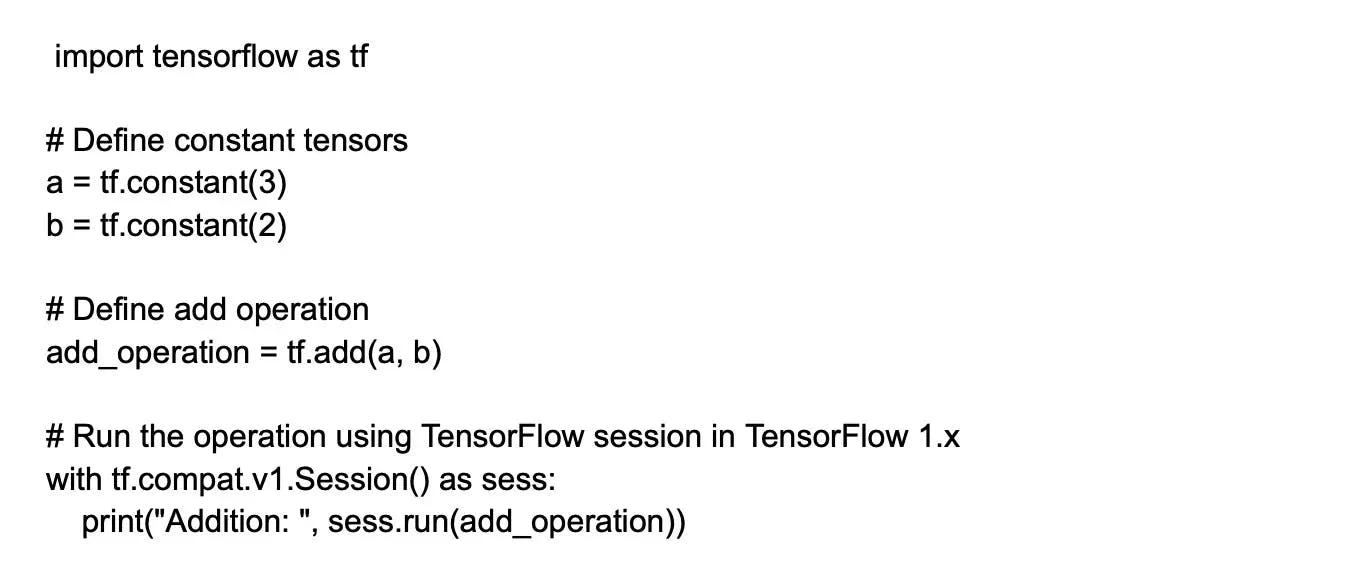

36. What is a TensorFlow optimizer?

A TensorFlow optimizer is an essential component in the process of training a machine learning or deep learning model. It is responsible for updating the model's weights and biases to minimize the loss function.

Optimizers use various optimization algorithms like Gradient Descent, Adam, RMSprop, and AdaGrad to improve the model's performance iteratively over time.

These algorithms compute the gradient of the loss function with respect to each weight by using techniques like backpropagation and adjust the weights accordingly. In TensorFlow, optimizers are available under the tf.keras.optimizers module and can be easily integrated into your training process.
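As a minimal sketch of an optimizer at work, assuming TensorFlow 2.x with tf.keras; the toy data (y = 2x + 1), learning rate, and epoch count are illustrative choices:

```python
import numpy as np
import tensorflow as tf

# Toy regression data for y = 2x + 1.
x = np.linspace(-1.0, 1.0, 64, dtype=np.float32).reshape(-1, 1)
y = 2.0 * x + 1.0

model = tf.keras.Sequential([tf.keras.layers.Dense(1)])

# The optimizer decides how gradients of the loss update the weights.
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1), loss="mse")
history = model.fit(x, y, epochs=50, batch_size=16, verbose=0)

losses = history.history["loss"]
print(losses[0], losses[-1])
```

Swapping SGD for tf.keras.optimizers.Adam or RMSprop changes only the compile line.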

37. How can you save and restore a TensorFlow model?

To save and restore a TensorFlow model, you can use the tf.keras.Model.save() method and the tf.keras.models.load_model() function. The save() method stores the model architecture, weights, and optimizer state so that the model can be easily loaded and reused. In TensorFlow 2.x releases that bundle Keras 2, a path without a file extension is written in the SavedModel format; releases that bundle Keras 3 (TensorFlow 2.16 and later) expect a path ending in .keras or .h5 instead.

When you want to save your trained model, call:

model.save('path/to/saved_model/')

When you need to restore the model, call:

restored_model = tf.keras.models.load_model('path/to/saved_model/')

The restored_model can be used for further training, evaluation, or deployment just like the original model.
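A round trip can be sketched as follows, assuming TensorFlow 2.x with tf.keras. The .keras file name is an assumption made so the call is accepted by releases that bundle Keras 3, and the tiny model is illustrative:

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(2),
])
model.compile(optimizer="adam", loss="mse")

# Hypothetical location for the saved model.
path = os.path.join(tempfile.mkdtemp(), "model.keras")
model.save(path)
restored_model = tf.keras.models.load_model(path)

# The restored model should reproduce the original's outputs.
sample = np.ones((1, 3), dtype=np.float32)
same = np.allclose(model(sample).numpy(), restored_model(sample).numpy())
```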

Wrapping up

This collection of relevant TensorFlow interview questions can help both recruiting managers and developers. It can help the former evaluate candidates for their technical proficiency in TensorFlow, and it can help the latter prepare for an interview.
