Google Cloud

Basic Interview Q&A

1. What are the layers of cloud architecture?

Cloud architecture is commonly divided into the following layers:

- Physical layer: This layer contains the network, physical servers, and other hardware components.

- Infrastructure layer: This layer includes virtualized resources such as storage, among other things.

- Platform layer: This layer consists of the applications, operating systems, and other components.

- Application layer: It is the layer with which the end-user interacts directly.

2. How would you define VPC?

VPC stands for Virtual Private Cloud. It is a virtual network that provides connectivity for Compute Engine VM instances, Google Kubernetes Engine clusters, and various other services. A VPC gives you a great deal of control over how workloads connect regionally or globally. A single VPC can span multiple regions, and traffic between those regions does not have to traverse the public Internet.
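To make the multi-region point concrete, here is a minimal sketch that uses only Python's standard `ipaddress` module. It is a toy model, not a GCP API call: the `Vpc` and `Subnet` classes, the network name, the subnet names, and the CIDR ranges are all hypothetical. It only illustrates the idea that one VPC holds regional subnets with non-overlapping internal ranges.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Subnet:
    name: str
    region: str
    cidr: ipaddress.IPv4Network


@dataclass
class Vpc:
    name: str
    subnets: list = field(default_factory=list)

    def add_subnet(self, name: str, region: str, cidr: str) -> Subnet:
        """Add a regional subnet, rejecting ranges that overlap existing ones."""
        network = ipaddress.ip_network(cidr)
        for existing in self.subnets:
            if existing.cidr.overlaps(network):
                raise ValueError(f"{cidr} overlaps subnet {existing.name}")
        subnet = Subnet(name, region, network)
        self.subnets.append(subnet)
        return subnet

    def region_for(self, ip: str):
        """Return the region whose subnet contains the internal IP, or None."""
        address = ipaddress.ip_address(ip)
        for subnet in self.subnets:
            if address in subnet.cidr:
                return subnet.region
        return None


# Hypothetical layout: one VPC with two regional subnets.
vpc = Vpc("demo-vpc")
vpc.add_subnet("web-us", "us-central1", "10.0.1.0/24")
vpc.add_subnet("web-eu", "europe-west1", "10.0.2.0/24")
```

Calling `vpc.region_for("10.0.2.200")` on this layout resolves the address to the European subnet, even though both subnets belong to the same network.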

3. What libraries and tools are available for GCP cloud storage?

JSON and XML APIs are fundamental to Google Cloud Platform cloud storage. In addition to this, Google provides the following tools for interfacing with cloud storage.

Google Cloud Console: A web-based graphical interface for managing Cloud Storage buckets and objects, alongside the other GCP services (computing, data storage, data analytics, machine learning, and management tools) that run on the same infrastructure as Google's end-user products such as Google Search, Gmail, Google Drive, and YouTube. A credit card or bank account is required to register for a GCP account.

Cloud Storage Client Libraries: Language-specific libraries that let applications read and write Cloud Storage data programmatically. Cloud Storage itself stores data on Google's infrastructure with high reliability, performance, and availability, and it can also be used to deliver large data objects to users via direct download.

gsutil Command-line Tool: A Python application that lets you access Cloud Storage from the command line. gsutil supports a variety of bucket and object management operations, such as creating and deleting buckets and uploading, downloading, and deleting objects.
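Because the JSON API is a plain HTTP interface, the tools above ultimately issue requests against URLs like the ones built below. This is a minimal sketch: the helper name `object_url` and the bucket and object names are hypothetical, and a real request to a private object would also need an OAuth 2.0 access token in an `Authorization` header, which is not shown.

```python
from urllib.parse import quote

JSON_API_BASE = "https://storage.googleapis.com/storage/v1"


def object_url(bucket: str, object_name: str, download: bool = False) -> str:
    """Build the JSON API URL for an object's metadata or its contents."""
    # The object name is a single path segment, so "/" is percent-encoded too.
    encoded_name = quote(object_name, safe="")
    url = f"{JSON_API_BASE}/b/{quote(bucket, safe='')}/o/{encoded_name}"
    # alt=media asks for the object data instead of its metadata.
    return url + "?alt=media" if download else url


metadata_url = object_url("my-bucket", "logs/2024/app.log")
download_url = object_url("my-bucket", "logs/2024/app.log", download=True)
```

The same object would be addressed as `gs://my-bucket/logs/2024/app.log` on the gsutil command line.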

4. What is a Google Cloud API? How can we access it?

Google Cloud APIs are programmatic interfaces to Google Cloud services. They let users add capabilities ranging from storage access to machine-learning-based image analysis to their applications.

Cloud APIs are simple to use from server applications through client libraries, which are available for a number of programming languages. Firebase SDKs or third-party clients can be used to build mobile applications. Google Cloud APIs can also be accessed through the Google Cloud SDK command-line tools or the Google Cloud Console web UI.

5. What exactly is a bucket in Google Cloud Storage?

Buckets are the basic containers that hold data. They let us organize data and control access to it. Each bucket has a globally unique name and a geographic location where its contents are stored. A bucket also has a default storage class, which is applied to objects that are added to the bucket without a storage class of their own. There is no limit to the number of buckets that can be created or deleted.
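The default storage class rule can be illustrated with a small in-memory model. This is only a sketch of the behaviour described above, not the Cloud Storage client library: the `Bucket` class, its `upload` method, and the bucket name are hypothetical, while `STANDARD`, `NEARLINE`, and `COLDLINE` are real storage class names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Bucket:
    name: str
    location: str
    default_storage_class: str = "STANDARD"
    objects: dict = field(default_factory=dict)

    def upload(self, object_name: str, data: bytes,
               storage_class: Optional[str] = None) -> str:
        """Store an object and return the storage class it ends up with."""
        # Fall back to the bucket default when the caller gives no class.
        effective_class = storage_class or self.default_storage_class
        self.objects[object_name] = (data, effective_class)
        return effective_class


archive = Bucket("example-archive-bucket", "EU", default_storage_class="NEARLINE")
inherited = archive.upload("a.txt", b"x")
explicit = archive.upload("b.txt", b"y", storage_class="COLDLINE")
```

Here `a.txt` inherits the bucket default, while `b.txt` keeps the class that was set explicitly at upload time.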

6. Define Object Versioning.

Object versioning is used to recover objects that have been overwritten or deleted. It raises storage costs, but it ensures that objects remain recoverable after being replaced or removed. When object versioning is enabled on a bucket, a noncurrent version of the object is retained whenever the live object is deleted or overwritten. The properties generation and metageneration identify a version of an object: generation identifies the version of the content, whereas metageneration identifies the version of the metadata for that generation.
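The interplay of generation and metageneration is easier to see in a toy model. The sketch below is an in-memory simulation under simplifying assumptions, not the Cloud Storage API: the class and method names are hypothetical, and generations are taken from a simple counter rather than the large numbers a real bucket assigns.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ObjectVersion:
    generation: int          # changes whenever the content is replaced
    data: bytes
    metadata: dict = field(default_factory=dict)
    metageneration: int = 1  # changes whenever the metadata is updated


class VersionedBucket:
    def __init__(self):
        self._next_generation = itertools.count(1)  # simplified counter
        self.live = {}        # object name -> current ObjectVersion
        self.noncurrent = {}  # object name -> list of older ObjectVersions

    def upload(self, name, data):
        self._retire(name)
        version = ObjectVersion(next(self._next_generation), data)
        self.live[name] = version
        return version

    def delete(self, name):
        # With versioning on, the live version is kept as noncurrent.
        self._retire(name)

    def update_metadata(self, name, **changes):
        version = self.live[name]
        version.metadata.update(changes)
        version.metageneration += 1  # generation stays the same
        return version

    def restore(self, name, generation):
        """Make a copy of a noncurrent version the live version again."""
        for old in self.noncurrent.get(name, []):
            if old.generation == generation:
                return self.upload(name, old.data)
        raise KeyError(f"{name}#{generation} not found")

    def _retire(self, name):
        current = self.live.pop(name, None)
        if current is not None:
            self.noncurrent.setdefault(name, []).append(current)
```

In this model, overwriting or deleting an object never destroys data; it only moves the previous version into the noncurrent list, from which `restore` can bring it back as a new generation.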

7. What is serverless computing?

Serverless computing refers to the practice of offering backend services on a per-use basis. Although servers are still utilized, a company that uses serverless backend services is charged based on consumption rather than a fixed amount of bandwidth or number of servers.

In serverless computing, the cloud provider runs the servers and allocates resources dynamically. The provider supplies the infrastructure, so users can run their code without worrying about hardware, and they pay only for the resources they actually use. This streamlines code deployment and removes most maintenance and scaling work from the user. Serverless computing is a form of utility computing.

8. On-demand functionality is provided by cloud computing in what way?

Cloud computing was designed to give users access to functionality on demand, at any time and from any location. Platforms such as Google Cloud achieve this by making applications and data available over the network: a Google Cloud user can access their files in the cloud at any time, on any device, from any location, as long as they are connected to the Internet.

9. What is the connection between Google Compute Engine and Google App Engine?

Google App Engine (GAE) and Google Compute Engine (GCE) complement one another. GAE is a Platform-as-a-Service (PaaS), whereas GCE is an Infrastructure-as-a-Service (IaaS). GAE is commonly used to power mobile backends, web-based apps, and line-of-business applications. If we require more control over the underlying infrastructure, GCE is an excellent choice. GCE, for example, can be used to implement bespoke business logic or to run our own storage solution.

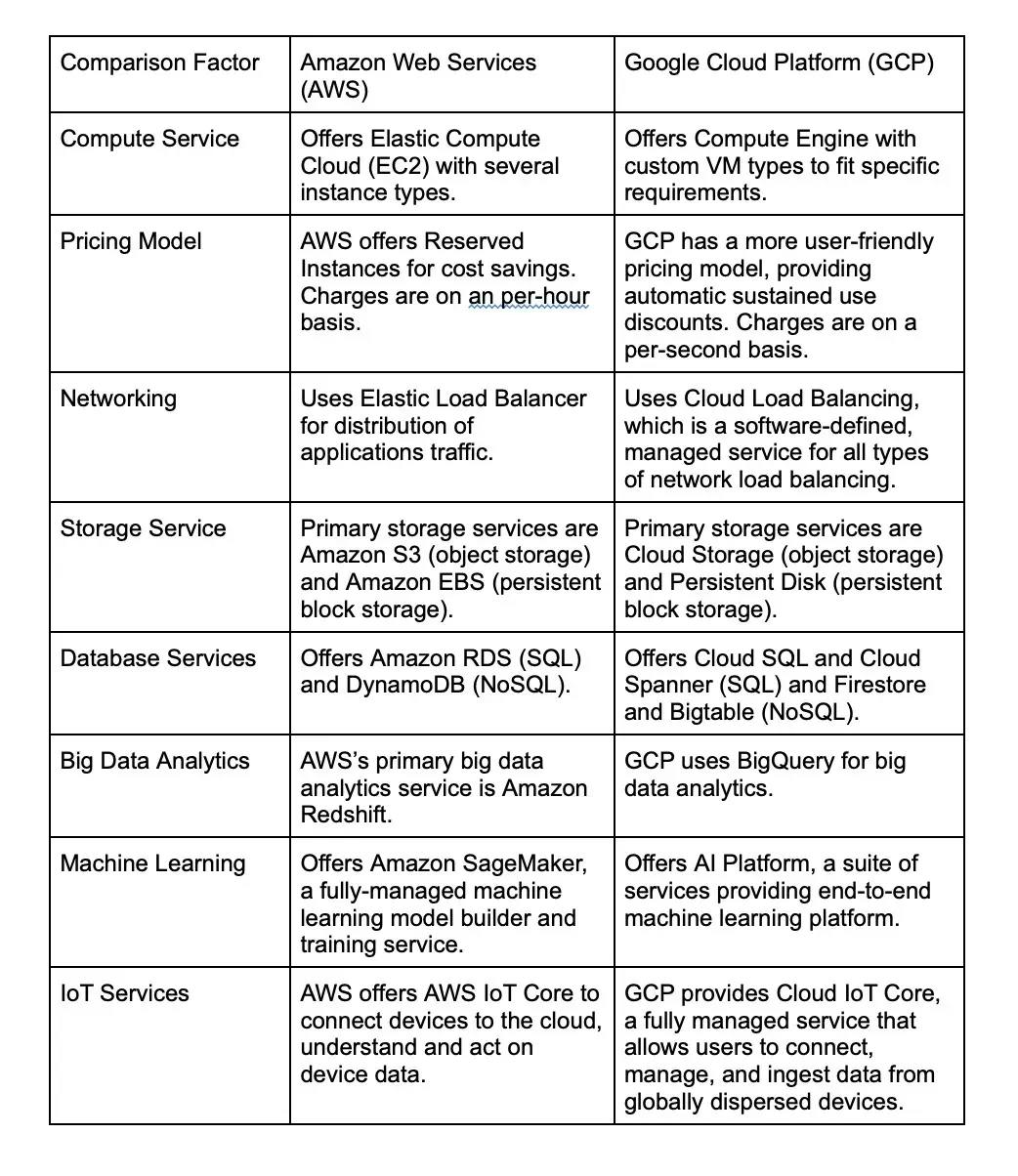

10. What is Google Cloud Platform (GCP)?

Google Cloud Platform is a suite of cloud computing services provided by Google, which includes a wide range of services such as infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS).

GCP provides a scalable and secure cloud computing environment for businesses and organizations of all sizes. It allows users to deploy and run applications, store and analyze data, and build machine learning models, among other functionalities. It offers a wide range of services, including computing, storage, databases, analytics, machine learning, security, and networking.


11. What services does GCP provide?

Google Cloud Platform (GCP) provides a wide range of services. Here are some categorized under different domains:

Compute:

- Google Compute Engine (Virtual Machines)

- Google Kubernetes Engine (Container-based applications)

Storage & Databases:

- Google Cloud Storage

- Cloud SQL

- Firestore

Networking:

- Google Virtual Private Cloud (VPC)

- Cloud Load Balancing

Big Data:

- BigQuery

- Cloud Dataflow

Machine Learning:

- Google AI Platform

- AutoML

Identity & Security:

- Cloud Identity and Access Management (IAM)

- Cloud Identity-Aware Proxy

12. What are the benefits of using GCP?

Google Cloud Platform (GCP) boasts several advantages that make it a competitive choice amongst other cloud providers. Here are some of the benefits:

Powerful Data Analytics and Machine Learning: GCP provides robust data analytics and machine learning services that benefit from Google's pioneering work in these areas. Tools like BigQuery for data warehousing, Cloud Machine Learning Engine, and built-in AI services can provide businesses with powerful insights.

Google's Infrastructure: GCP users benefit from Google's global, high-speed network, ensuring fast and reliable access to their data and services.

Security: GCP uses the same security model that Google employs for its own services such as Search and Gmail. GCP customers can therefore be assured that their data is protected by Google's robust security protocols.

Cost-Effective and Customizable Pricing: GCP's pricing model is often more flexible compared to other giants like AWS or Azure, with many services billed per second as opposed to per hour. It also offers committed use contracts where prices can be heavily discounted if you commit to using a certain product over a certain period.

Sustainability: Google's commitment to achieving 100% renewable energy usage for its global operations can be beneficial to organizations focusing on sustainability.

Live Migration of Virtual Machines: Google Cloud is one of the few providers that offer live migration of virtual machines. This feature enables proactive maintenance and mitigates the impact of downtime.

13. What are the pricing models for GCP?

Google Cloud Platform (GCP) offers a flexible and transparent pricing structure designed to fit different needs and budgets. The specific prices for various services can depend on a variety of factors, from the types of VMs being used to where the data is stored geographically. Here are some of the key components of its pricing model:

Pay-As-You-Go: This is the default pricing model for GCP. Customers pay for what they use with no up-front costs. Billing is on a per-second basis for many services, providing a high level of granularity and cost control.

Sustained Use Discounts: For services such as Compute Engine and Cloud SQL, GCP automatically gives discounts when a virtual machine (VM) is used for a significant portion of the billing month. The discount increases with usage, up to 30%.

Always Free Tier: GCP also offers an always-free tier for many of its services, which allows users to use these services up to specific limits without any cost. This is great for small-scale projects or developers testing out different services.
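The "up to 30%" figure for sustained use discounts follows from tiered pricing. The sketch below works through the arithmetic, assuming the incremental tiers published for N1 machine types (each successive quarter of the month billed at 100%, 80%, 60%, and 40% of the base rate). Other machine families use different tiers, and the function names here are hypothetical.

```python
# (upper bound of usage fraction, multiplier applied to the base rate)
# Assumption: tiers as published for N1 machine types.
N1_TIERS = [(0.25, 1.0), (0.50, 0.8), (0.75, 0.6), (1.00, 0.4)]


def effective_cost(usage_fraction: float, full_month_price: float) -> float:
    """Cost of running a VM for the given fraction of the billing month."""
    if not 0.0 <= usage_fraction <= 1.0:
        raise ValueError("usage_fraction must be between 0 and 1")
    cost = 0.0
    lower = 0.0
    for upper, multiplier in N1_TIERS:
        # Portion of the usage that falls inside this tier.
        in_tier = max(0.0, min(usage_fraction, upper) - lower)
        cost += in_tier * full_month_price * multiplier
        lower = upper
    return cost


def discount(usage_fraction: float) -> float:
    """Net discount relative to the undiscounted price for the same usage."""
    if usage_fraction == 0:
        return 0.0
    return 1.0 - effective_cost(usage_fraction, 1.0) / usage_fraction
```

Under these assumed tiers, a VM that runs the whole month pays 0.25 × (1.0 + 0.8 + 0.6 + 0.4) = 0.7 of the base price, which is the 30% maximum; a VM that runs half the month gets a net discount of about 10%, and one that runs a quarter of the month gets none.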

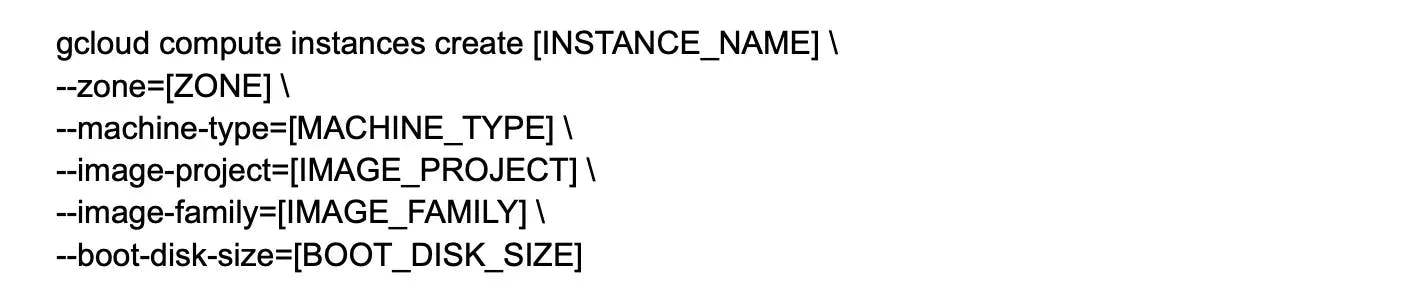

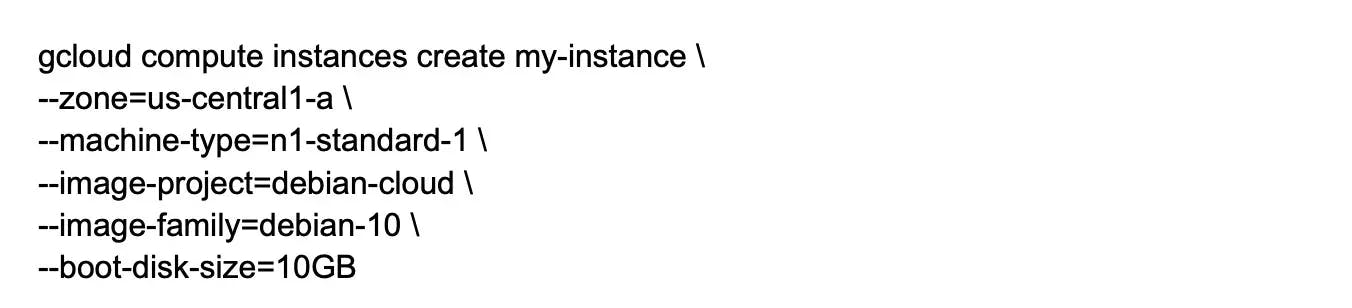

14. What is a Compute Engine instance?

A Compute Engine instance is a virtual machine (VM) provided by GCP that allows users to run applications and services on the cloud. They can customize the VM's specifications, including CPU, memory, and storage, and choose from a wide range of operating systems and pre-configured images to create their instances.

15. What is Cloud Storage?

Cloud Storage is a service provided by GCP that allows users to store and retrieve data on the cloud. It can store any kind of data, including objects, files, and media, in a highly scalable and durable storage system. Cloud Storage also provides various features, such as data encryption and access control, to ensure data security.

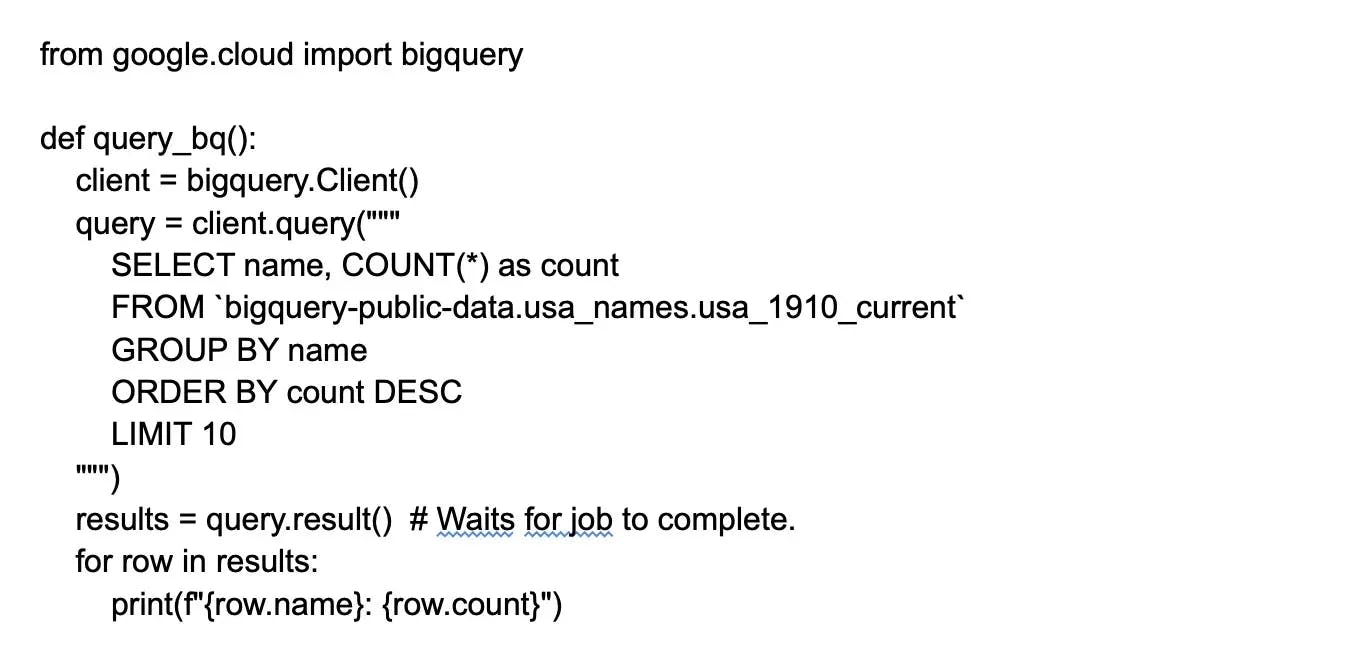

16. What is BigQuery?

BigQuery is a fully managed data warehouse and analytics platform provided by GCP that allows users to analyze large datasets quickly and interactively. They can use SQL-like queries to retrieve data from multiple sources and analyze it in real-time using features such as data visualization and machine learning.

17. What is App Engine?

App Engine is a platform as a service (PaaS) provided by GCP that allows users to develop and deploy web and mobile applications on the cloud. It provides a fully managed and scalable environment, allowing users to focus on writing code rather than managing infrastructure. It supports several programming languages, frameworks, and libraries.

18. What is Cloud Datastore?

Cloud Datastore is a NoSQL document database provided by GCP that allows users to store, retrieve, and query data on the cloud. It is fully managed, highly scalable, and can handle semi-structured data. It provides features such as ACID transactions, indexes, and automatic scaling, making it easy to develop and deploy applications.

19. What is Cloud SQL?

Cloud SQL is a fully managed relational database service provided by GCP that allows users to host and manage MySQL, PostgreSQL, and SQL Server databases on the cloud. It provides features like automatic backups, replication, and high availability that make it easy to build and maintain databases on the cloud.

20. What is Cloud Spanner?

Cloud Spanner is a fully managed relational database service that allows users to horizontally scale their databases globally, ensuring high availability and consistency. It offers features like ACID transactions, automatic sharding, and automatic replication, which simplify the process of building and maintaining high-scale, mission-critical databases on the cloud.

21. What is Cloud Bigtable?

Cloud Bigtable is a fully managed, highly scalable NoSQL database service designed for large-scale and high-performance workloads, such as real-time analytics and time-series data. It offers automatic scaling, high availability, and integration with popular big data tools.

22. What is Cloud Pub/Sub?

Cloud Pub/Sub is a messaging service by GCP that enables real-time and asynchronous communication between applications and services. It allows decoupling of publishers and subscribers, which ensures high availability and scalability, and provides reliable and secure delivery of messages through a publish-subscribe model.
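The decoupling of publishers and subscribers can be sketched with an in-memory model. This is not the Pub/Sub client library: the `Topic` class and `pull` helper are hypothetical, and acknowledgements, redelivery, and persistence are deliberately left out.

```python
from collections import deque


class Topic:
    def __init__(self, name):
        self.name = name
        self.subscriptions = {}

    def subscribe(self, subscription_name):
        """Create a subscription with its own independent message queue."""
        queue = deque()
        self.subscriptions[subscription_name] = queue
        return queue

    def publish(self, message):
        # The publisher only knows the topic, never the subscribers.
        for queue in self.subscriptions.values():
            queue.append(message)


def pull(queue, max_messages=10):
    """Remove and return up to max_messages from a subscription queue."""
    batch = []
    while queue and len(batch) < max_messages:
        batch.append(queue.popleft())
    return batch


# Hypothetical topic with two independent consumers.
orders = Topic("orders")
billing = orders.subscribe("billing")
shipping = orders.subscribe("shipping")
orders.publish("order-1")
orders.publish("order-2")
```

Each subscription receives its own copy of every message, so the billing and shipping consumers can read at their own pace without affecting each other or the publisher.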

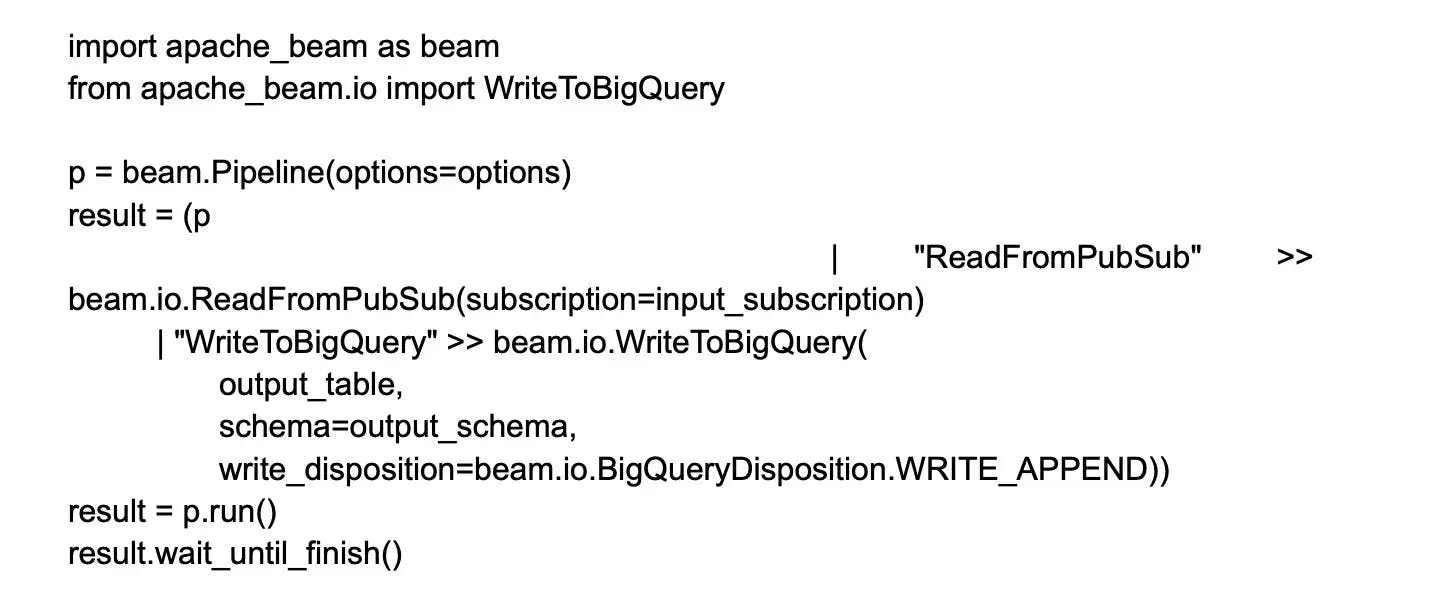

23. What is Cloud Dataflow?

Cloud Dataflow is a fully managed, serverless data processing service by Google Cloud Platform. It enables users to develop and execute data processing pipelines for batch and stream processing in a highly scalable and fault-tolerant environment. It offers a simple programming model and supports popular data sources and sinks.

24. What is Cloud Dataproc?

Cloud Dataproc is a fully managed data processing service that allows users to easily create and manage Apache Hadoop, Apache Spark, and other big data clusters. It provides a highly scalable, performant, and cost-effective environment for running data processing workloads. It also integrates with other GCP services.

25. What is Cloud Machine Learning Engine?

Cloud Machine Learning Engine (CMLE) is a managed service by GCP that enables users to build and deploy machine learning models at scale. It simplifies the process of training and deploying machine learning models by handling the underlying infrastructure and providing a set of tools and APIs.

26. What is Cloud Composer?

Cloud Composer is a fully-managed workflow orchestration service from Google Cloud. It enables users to author, schedule, and monitor multi-step data pipelines using popular open-source tools such as Apache Airflow. With Cloud Composer, users can create and manage complex workflows that integrate with other cloud services, making it easier to build scalable and reliable data pipelines in the cloud.
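At its core, a multi-step pipeline is a set of tasks plus the dependencies between them, and the orchestrator runs each task only after its upstream tasks have finished. The sketch below illustrates that ordering with Python's standard `graphlib` module (Python 3.9+). It is not Airflow code: the task names and the `run_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def run_order(dependencies):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


order = run_order(pipeline)
```

An orchestrator such as Airflow adds scheduling, retries, and monitoring on top of this kind of dependency graph.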

27. What is Cloud Functions?

Cloud Functions is Google Cloud's serverless computing service, comparable to AWS Lambda and Azure Functions. It allows developers to write and deploy code that runs in response to events or HTTP requests without the need to manage infrastructure. It scales automatically, making it ideal for building event-driven and microservices-based applications in the cloud.
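As a rough illustration, an HTTP-triggered function in the Python runtime is an ordinary function that receives a Flask request object and returns a response. The sketch below follows that shape; the function name `hello_http` is arbitrary, and `FakeRequest` is a hypothetical stand-in that exposes only the two request members used here so the function can be exercised locally without Flask.

```python
import json


def hello_http(request):
    """HTTP function: greet the name given in the JSON body or query string."""
    body = request.get_json(silent=True) or {}
    name = body.get("name") or request.args.get("name") or "World"
    return f"Hello, {name}!"


class FakeRequest:
    """Hypothetical stand-in for the Flask request, for local testing only."""

    def __init__(self, args=None, body=None):
        self.args = args or {}
        self._body = body

    def get_json(self, silent=False):
        if self._body is None:
            return None
        return json.loads(self._body)
```

The function holds no server or scaling logic at all; the platform decides how many instances to run and invokes the function once per request.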

28. What is Cloud Load Balancing?

Cloud Load Balancing is Google Cloud's load balancing service. It distributes incoming traffic across multiple instances or services, which improves availability and performance. It can work with autoscaling to grow or shrink backend capacity based on traffic, and it performs health checks and fails over between instances to ensure high availability.
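The combination of traffic distribution and health checks can be sketched in a few lines. This is a simplified model that assumes plain round-robin selection, which is not necessarily the algorithm Cloud Load Balancing uses; the class, its methods, and the backend names are hypothetical.

```python
import itertools


class LoadBalancer:
    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def report_health(self, backend, is_healthy):
        """Record the result of a health check for one backend."""
        if is_healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def route(self):
        """Return the next healthy backend in round-robin order."""
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = LoadBalancer(["vm-a", "vm-b", "vm-c"])
first_round = [lb.route() for _ in range(4)]
lb.report_health("vm-b", False)  # simulate a failed health check
after_failure = [lb.route() for _ in range(3)]
```

Once `vm-b` fails its health check it simply stops receiving traffic, and it rejoins the rotation as soon as a later check reports it healthy again.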

29. What is Cloud DNS?

Cloud DNS is Google Cloud's scalable and highly available Domain Name System (DNS) service. It allows users to publish and manage their domain names with low latency, high availability, and automatic DNS record synchronization across the globe. It also provides advanced features like DNSSEC and Anycast networking.

30. What is Cloud CDN?

Cloud CDN is Google Cloud's content delivery network service. It caches content at edge locations worldwide, reducing latency and improving performance for end-users. Cloud CDN also provides advanced features such as SSL/TLS encryption, HTTP/2 support, and real-time logs and metrics.

31. What is Cloud Interconnect?

Cloud Interconnect is a Google Cloud service that enables users to connect their on-premises infrastructure to cloud services using dedicated, low-latency connections.

It provides private connectivity options, namely Dedicated Interconnect and Partner Interconnect, which let users extend their networks to the cloud with high bandwidth and reliability. Cloud VPN and Direct Peering are related alternatives for reaching Google's network.

32. What is Cloud VPN?

Cloud VPN is a Google Cloud service that allows users to securely connect their on-premises network to cloud services using a Virtual Private Network (VPN). It provides encrypted and authenticated connections over the public internet, enabling users to extend their networks to the cloud with high security and reliability.

33. What is Cloud Security Scanner?

Cloud Security Scanner is a Google Cloud web application security scanner. It enables users to identify security vulnerabilities in their web applications by crawling and testing them for common issues such as cross-site scripting (XSS), mixed content, and outdated libraries. Cloud Security Scanner can be integrated into continuous integration and continuous deployment (CI/CD) pipelines, making it easier to automate web application security testing in the cloud.

34. What is Cloud IAM?

Cloud Identity and Access Management (Cloud IAM) is a feature of Google Cloud Platform (GCP) that allows you to manage access control by defining who (identity) has what access (role) for which resource.

One of the main advantages of Cloud IAM is that it provides unified permission management across all GCP services. This means that you can centrally manage permissions for all services in one location, providing consistent and comprehensive access control.
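The "who has what access for which resource" model can be made concrete with a small policy check. The `bindings` layout below mirrors the shape of an IAM policy (a list of role-to-members bindings attached to a resource), but everything else is a simplified assumption: the role definitions are abbreviated, the members are invented, and the `is_allowed` helper is hypothetical and ignores group expansion, conditions, and inheritance from folders or projects.

```python
# Abbreviated, illustrative role definitions (real roles carry more permissions).
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectCreator": {"storage.objects.create"},
}

# Policy attached to one resource, in the bindings shape IAM policies use.
bucket_policy = {
    "bindings": [
        {
            "role": "roles/storage.objectViewer",
            "members": ["user:alice@example.com", "group:analysts@example.com"],
        },
        {
            "role": "roles/storage.objectCreator",
            "members": [
                "serviceAccount:uploader@example-project.iam.gserviceaccount.com"
            ],
        },
    ]
}


def is_allowed(policy, member, permission):
    """Return True if any binding grants the member a role with the permission."""
    for binding in policy["bindings"]:
        if member in binding["members"]:
            if permission in ROLE_PERMISSIONS.get(binding["role"], set()):
                return True
    return False
```

In this sketch Alice can read objects but cannot create them, while the uploader service account can create objects but cannot read them, which is the principle of least privilege expressed through role bindings.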

35. What is Cloud Resource Manager?

Cloud Resource Manager is a Google Cloud service that enables users to manage and organize their cloud resources across projects and folders. It provides a hierarchical view of resources, allowing users to set policies, budgets, and permissions at different levels. It also provides APIs and SDKs to automate resource management tasks which make it easier to scale and optimize cloud usage.

36. What is Cloud Monitoring?

Cloud Monitoring is a Google Cloud service that enables users to monitor the performance, availability, and health of their cloud resources and applications. It provides real-time metrics, logs, and alerts, allowing users to troubleshoot and optimize cloud deployments. Cloud Monitoring also integrates with other cloud services, such as Cloud Logging and Cloud Trace, providing a unified view of the cloud environment.

37. What is Cloud Logging?

Cloud Logging is a Google Cloud service that enables users to store, search, and analyze logs from their cloud resources and applications. It provides real-time and historical insights into system events, errors, and performance, allowing users to troubleshoot issues and debug their cloud deployments.

Cloud Logging integrates with other cloud services, such as Cloud Monitoring and Cloud Trace, which provides a unified view of the cloud environment.
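Logs are easier to search and analyze when they are structured. On several GCP runtimes, such as Cloud Run and Cloud Functions, a JSON object written as a single line to standard output is ingested as a structured log entry, with `severity` and `message` treated as special fields. The sketch below assumes that behaviour; the `log` helper and the extra `order_id` field are hypothetical.

```python
import json
import sys


def log(severity, message, **fields):
    """Write one JSON log line to stdout and return it."""
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, file=sys.stdout)
    return line


line = log("ERROR", "payment failed", order_id="o-123")
```

Extra keys such as `order_id` become queryable fields of the entry, which makes it possible to filter logs by order rather than by free-text search.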

38. What is Cloud Debugger?

Cloud Debugger is a debugging service provided by Google Cloud. It enables users to debug their cloud applications without stopping or restarting them.

Cloud Debugger provides a snapshot of the application's state at any point in time, allowing users to inspect and analyze the code, variables, and call stack. It also supports debugging in production environments which makes it easier to troubleshoot issues in real-time.

39. What is Cloud Trace?

Cloud Trace is Google Cloud's distributed tracing service. It allows users to monitor and optimize the performance of their cloud applications. It provides end-to-end visibility into application latency and behavior, allowing users to identify bottlenecks and optimize resource utilization.

Cloud Trace integrates with cloud services, such as Cloud Logging and Cloud Monitoring, to provide a unified view of the cloud environment.

40. What is Cloud Storage Transfer Service?

Cloud Storage Transfer Service is a data transfer service by Google Cloud that enables users to transfer data from on-premises or other cloud storage systems to Google Cloud Storage. It supports transfers of large volumes of data, with scheduling and automation options, allowing users to migrate or backup their data to the cloud with ease.

Cloud Storage Transfer Service also provides validation and error handling capabilities that ensure the integrity of the transferred data.
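The text does not describe how the service validates transfers internally, but the general idea of an integrity check can be sketched with a checksum comparison. The example below uses a base64-encoded MD5 digest, which is the encoding Cloud Storage uses for an object's `md5Hash` metadata field; the helper names are hypothetical.

```python
import base64
import hashlib


def md5_base64(data: bytes) -> str:
    """Return the MD5 digest of data, base64-encoded."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def transfer_is_intact(source: bytes, reported_md5: str) -> bool:
    """Compare the source bytes against the hash reported for the destination."""
    return md5_base64(source) == reported_md5
```

After a transfer, the hash computed from the source data is compared with the hash reported for the destination object; any mismatch means the copy was corrupted or incomplete and should be retried.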

41. How can you use Google Cloud Platform to implement serverless APIs using Cloud Endpoints?

Google Cloud Platform provides a serverless API management solution called Cloud Endpoints. It enables you to create, deploy, and manage APIs that are secure, scalable, and highly available.

You can use open standards like OpenAPI and gRPC to define your API contracts, and automatically generate client libraries and documentation. Cloud Endpoints also integrates with popular GCP services like Cloud Functions, App Engine, and Compute Engine, making it easy to deploy your APIs in a serverless or containerized environment.

42. What is Cloud Deployment Manager?

Cloud Deployment Manager is a Google Cloud service that enables users to create, deploy, and manage cloud resources using templates and configuration files. It provides a declarative approach to infrastructure deployment, allowing users to define their desired state and automate the provisioning and configuration of cloud resources.

Cloud Deployment Manager supports a wide range of Google Cloud services and integrates with other cloud services like Cloud Build and Cloud Monitoring.
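Deployment Manager templates can be written in Python: a template defines a `GenerateConfig(context)` function that returns the resources to create, reading its inputs from `context.properties` and `context.env`. The sketch below follows that convention but is heavily trimmed. A real `compute.v1.instance` also needs disks and network interfaces, the partial `machineType` URL and the `e2-micro` default are assumptions, and `FakeContext` is a hypothetical stand-in used only to inspect the output locally.

```python
def GenerateConfig(context):
    """Return the resources this template should create."""
    zone = context.properties["zone"]
    machine_type = context.properties.get("machineType", "e2-micro")
    instance = {
        # context.env["name"] is the name declared in the config using the template.
        "name": context.env["name"] + "-vm",
        "type": "compute.v1.instance",
        "properties": {
            "zone": zone,
            "machineType": f"zones/{zone}/machineTypes/{machine_type}",
            # Trimmed: disks and networkInterfaces are omitted for brevity.
        },
    }
    return {"resources": [instance]}


class FakeContext:
    """Hypothetical stand-in for the template context, for local inspection."""

    def __init__(self, name, properties):
        self.env = {"name": name}
        self.properties = properties


config = GenerateConfig(FakeContext("demo", {"zone": "us-central1-a"}))
```

The template only declares the desired state; provisioning the resource, and updating it when the declaration changes, is left to the service.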

43. What are the best practices for using Google Cloud Platform?

Some best practices for using Google Cloud Platform include:

Security: Apply the principle of least privilege with Cloud IAM, encrypt data at rest and in transit, protect service accounts, and use Cloud Logging and Cloud Monitoring for threat detection.

Operational Excellence: Use automation tools like Cloud Deployment Manager for resource management, use CI/CD tools for application deployment, and use automatic scaling based on load.

Performance Efficiency: Place your resources close to your customers to reduce latency, choose the correct machine types considering CPU and memory needs, and leverage managed services for database workloads.

Cost Optimization: Make use of GCP's pricing tools like the pricing calculator and detailed billing report, take advantage of committed use contracts or sustained use discounts for Compute Engine instances, and set up budget alerts.

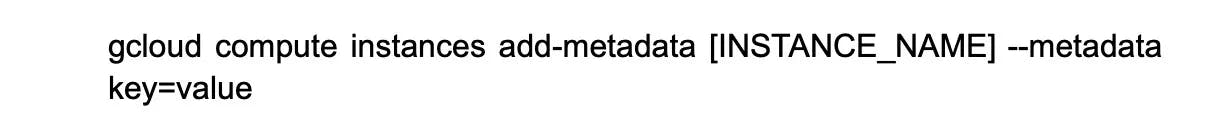

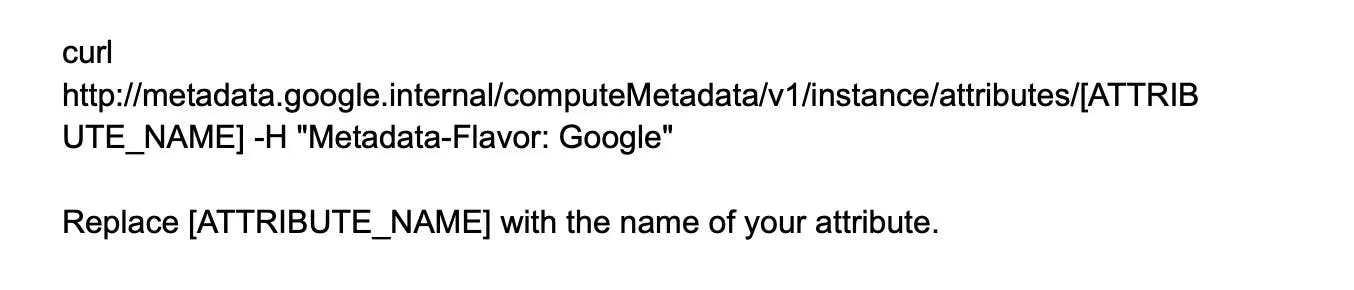

44. What is Google Cloud Shell?

Google Cloud Shell is a browser-based command-line interface (CLI) provided by Google Cloud that enables users to manage their Google Cloud Platform resources from anywhere with an internet connection. It provides a pre-configured environment with popular tools and SDKs, allowing users to easily access and manage their cloud resources using CLI commands.

Google Cloud Shell also supports file editing, version control, and customization, making it a powerful tool for cloud development and administration.

45. What is Cloud Console?

Cloud Console is the web-based management console provided by Google Cloud that enables users to manage their cloud resources and services. It has a user-friendly interface to view, configure, and monitor cloud services and provides access to documentation, billing, and support.

Cloud Console supports role-based access control, allowing users to grant access to specific resources and services based on their roles and permissions.

46. What is Cloud SDK?

Cloud SDK is a set of command-line tools provided by Google Cloud that enable users to manage their cloud resources and services. It offers a convenient way to interact with cloud services using CLI commands, scripts, and automation, as well as access to development and testing tools.

Cloud SDK includes tools for authentication, logging, debugging, and deployment, making it a powerful tool for cloud development and administration.

47. What is Cloud Launcher?

Cloud Launcher is Google Cloud's marketplace of pre-configured virtual machine images and software packages, which lets users easily deploy and manage their cloud applications. It has since been renamed Google Cloud Platform Marketplace.

It offers a wide range of popular software packages and solutions, including databases, web servers, and content management systems. These allow users to quickly set up and run their applications on the cloud. It also provides integration with other cloud services such as Cloud Monitoring and Cloud Storage.

48. What is the Google Cloud Platform Marketplace?

The Google Cloud Platform Marketplace is an online marketplace for third-party software and services that are tested, verified, and optimized to run on GCP. It offers software packages and solutions, including databases, web servers, and machine learning tools, that allow users to easily deploy and manage their cloud applications. It also provides integration with other GCP services like Cloud Storage and Cloud Logging.

49. What are the different Google Cloud Platform services for analytics?

Google Cloud Platform (GCP) offers a broad suite of analytics services that cater to a variety of use cases, ranging from automating routine tasks to performing advanced analytics. Here's a rundown of some of those services:

BigQuery: Google's fully managed and serverless data warehouse for large-scale analytics. It is designed to swiftly analyse large datasets using SQL.

Pub/Sub: A real-time messaging service that allows independent applications to publish and subscribe to messages. Useful in event-driven architectures and streaming analytics.

Dataflow: A fully managed service for stream and batch processing. It's particularly effective in dealing with large volumes of data and for real-time data processing use cases.

Data Studio: A reporting and visualization tool that helps you transform your datasets into reports and data dashboards.

Dataproc: A managed Spark and Hadoop service for big data processing. Useful in building pipelines, running analytics, and performing Machine Learning tasks.

Looker: A business intelligence platform that provides data visualization and business insights. It allows you to analyze and visualize data across multiple sources.

Data Catalog: A fully managed and scalable metadata management service. It provides a unified view of all your datasets across GCP services.

Cloud Data Fusion: An open source, cloud-native data integration platform to build and manage ETL/ELT data pipelines.

Cloud Data Loss Prevention (DLP): Provides a way to discover, classify, and redact sensitive information in your datastores.

Wrapping up

The GCP interview questions covered above can assist candidates with improving their interview preparation, and enable recruiters to evaluate their abilities accurately when hiring GCP developers. They cover basic, medium, and advanced levels and are among the most frequently asked Google Cloud interview questions.
