.NET

Basic Interview Q&A

1. What is the .NET Framework?

The .NET Framework is a comprehensive software development platform developed by Microsoft. It includes a runtime environment called the Common Language Runtime (CLR) and a rich set of class libraries. It supports multiple programming languages such as C#, VB.NET, and F#, and offers features like memory management, security, and exception handling.

The .NET Framework itself runs only on Windows and is primarily used to create Windows applications. With the introduction of .NET Core and its successors (.NET 5 and later), the same platform can also be used to develop cross-platform applications for Linux and macOS.

2. What is the Common Language Runtime (CLR)?

The Common Language Runtime (CLR) is the execution environment provided by the .NET Framework. It manages the execution of .NET applications, providing services like memory management, code verification, security, garbage collection, and exception handling.

One of the key features of the CLR is the Just-In-Time (JIT) compiler. When a .NET application is executed, the CLR uses the JIT compiler to convert the Intermediate Language (IL) code—a low-level, platform-agnostic programming language—into native machine code specific to the system the application is running on. This process happens at runtime, hence the term "Just-In-Time". This allows .NET applications to be platform-independent until they are executed, providing a significant advantage in terms of portability and performance.

3. Explain the difference between value types and reference types in .NET.

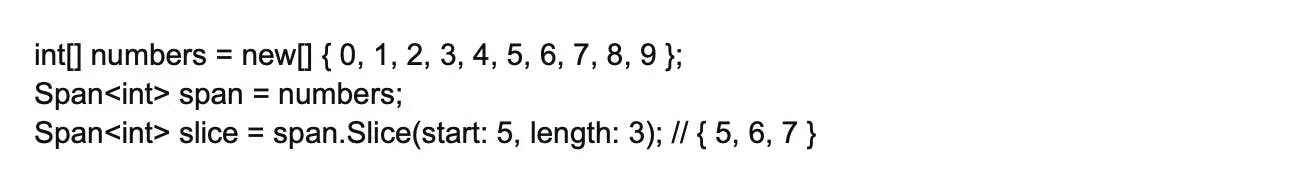

In .NET, data types are divided into two categories: value types and reference types. The primary difference between them lies in how they store their data and how they are handled in memory.

Value types directly contain their data. They include simple types such as int, bool, float, double, char, and decimal, as well as enums and structs. Local variables and parameters of a value type are typically stored on the stack, while a value-type field of a class is stored inline inside that object on the heap. When a value type is assigned to a new variable, a copy of the value is made. Therefore, changes made to one variable do not affect the other.

Reference types, on the other hand, store a reference to the actual data, which lives on the heap. They include classes, interfaces, delegates, strings, and arrays. When a reference type is assigned to a new variable, the reference is copied, not the object itself. Both variables then point to the same object, so changes made to that object through one variable are visible through the other. Strings are the usual exception in practice: they are immutable, so "changing" a string always produces a new object.

Understanding the difference between value types and reference types is crucial for efficient memory management and performance optimization in .NET applications.
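A minimal C# sketch of the copy semantics described above (top-level statements, .NET 6 or later assumed). The PointStruct and PointClass types are hypothetical and differ only in being a struct versus a class:

```csharp
using System;

// Value type (struct): assignment copies the whole value.
var s1 = new PointStruct { X = 1 };
var s2 = s1;              // s2 is an independent copy
s2.X = 99;
Console.WriteLine(s1.X);  // 1

// Reference type (class): assignment copies only the reference.
var c1 = new PointClass { X = 1 };
var c2 = c1;              // c1 and c2 refer to the same object
c2.X = 99;
Console.WriteLine(c1.X);  // 99

// Hypothetical types that differ only in being a struct vs a class.
struct PointStruct { public int X; }
class PointClass { public int X; }
```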

4. What is the purpose of the System.IO namespace in .NET?

The System.IO namespace in .NET is a fundamental part of the framework that provides classes and methods for handling input/output (I/O) operations. These operations include reading from and writing to files, data streams, and communication with devices like hard drives and network connections.

The System.IO namespace includes a variety of classes that allow developers to interact with the file system and handle data streams efficiently. Some of the key classes include:

File: Provides static methods for creating, copying, deleting, moving, and opening files.

Directory: Provides static methods for creating, moving, and enumerating through directories and subdirectories.

FileStream: Provides a stream for a file, supporting both synchronous and asynchronous read and write operations.

StreamReader and StreamWriter: These classes are for reading from and writing to character streams.

BinaryReader and BinaryWriter: These classes are for reading from and writing to binary streams.

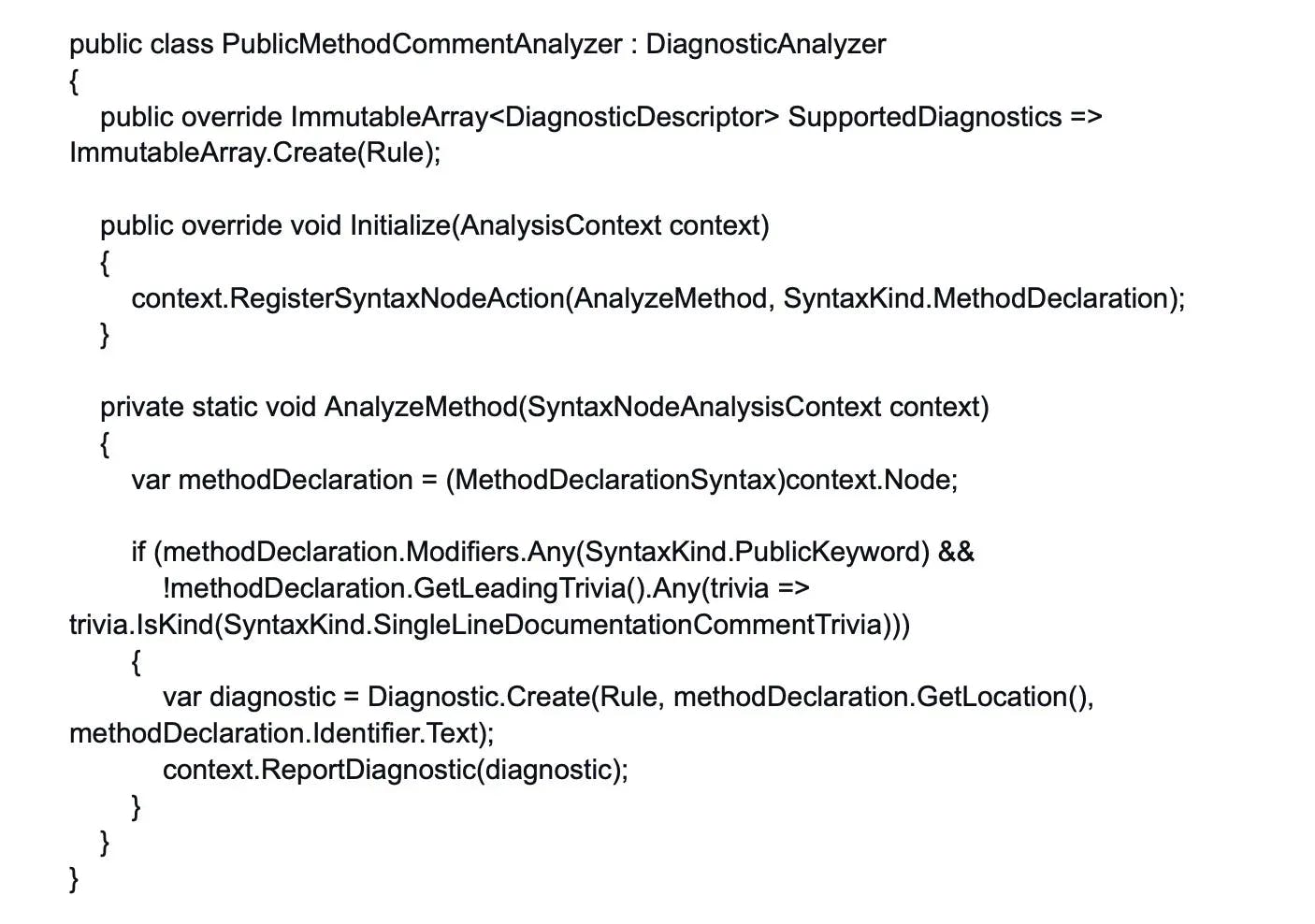

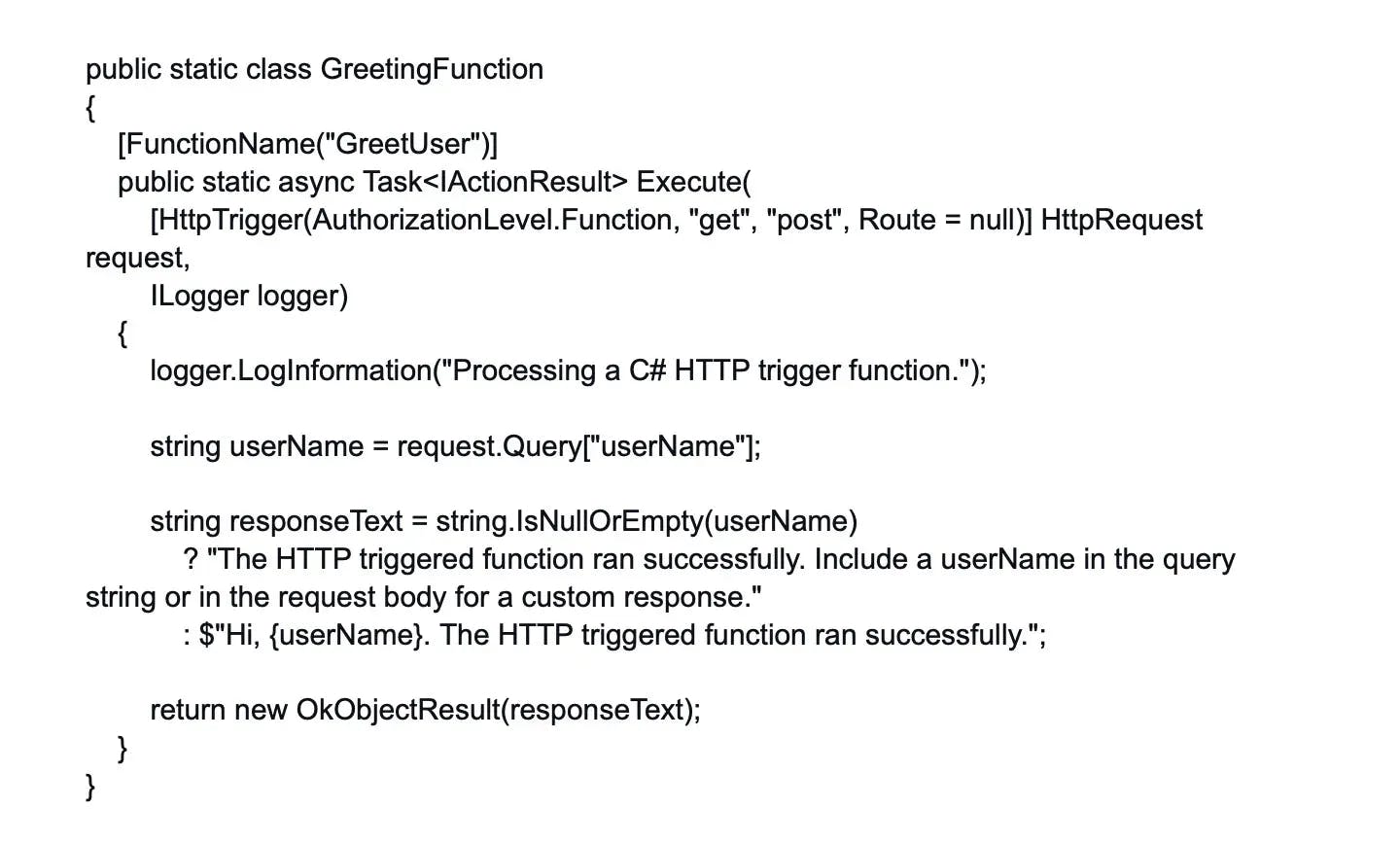
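The following sketch exercises several of the classes listed above (top-level statements, .NET 6 or later assumed). The temporary folder and file names are illustrative:

```csharp
using System;
using System.IO;

// Work in a temporary directory so the sketch does not touch real data.
string dir = Path.Combine(Path.GetTempPath(), "io-demo");
Directory.CreateDirectory(dir);
string path = Path.Combine(dir, "notes.txt");

// StreamWriter writes characters to a file (it opens a FileStream internally).
using (var writer = new StreamWriter(path))
{
    writer.WriteLine("first line");
    writer.WriteLine("second line");
}

// StreamReader reads the characters back, line by line.
using (var reader = new StreamReader(path))
{
    string? line;
    while ((line = reader.ReadLine()) != null)
        Console.WriteLine(line);
}

// BinaryWriter/BinaryReader store primitive values in binary form.
string binPath = Path.Combine(dir, "data.bin");
using (var bw = new BinaryWriter(File.Open(binPath, FileMode.Create)))
{
    bw.Write(42);
    bw.Write(3.14);
}
using (var br = new BinaryReader(File.OpenRead(binPath)))
{
    Console.WriteLine(br.ReadInt32());   // 42
    Console.WriteLine(br.ReadDouble());
}

// File offers one-call static helpers; Directory removes the folder afterwards.
Console.WriteLine(File.ReadAllLines(path).Length);   // 2
Directory.Delete(dir, recursive: true);
```

The using blocks matter here: the file must be flushed and closed by the writer before the reader opens it.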

5. How does the concept of attributes facilitate metadata in .NET?

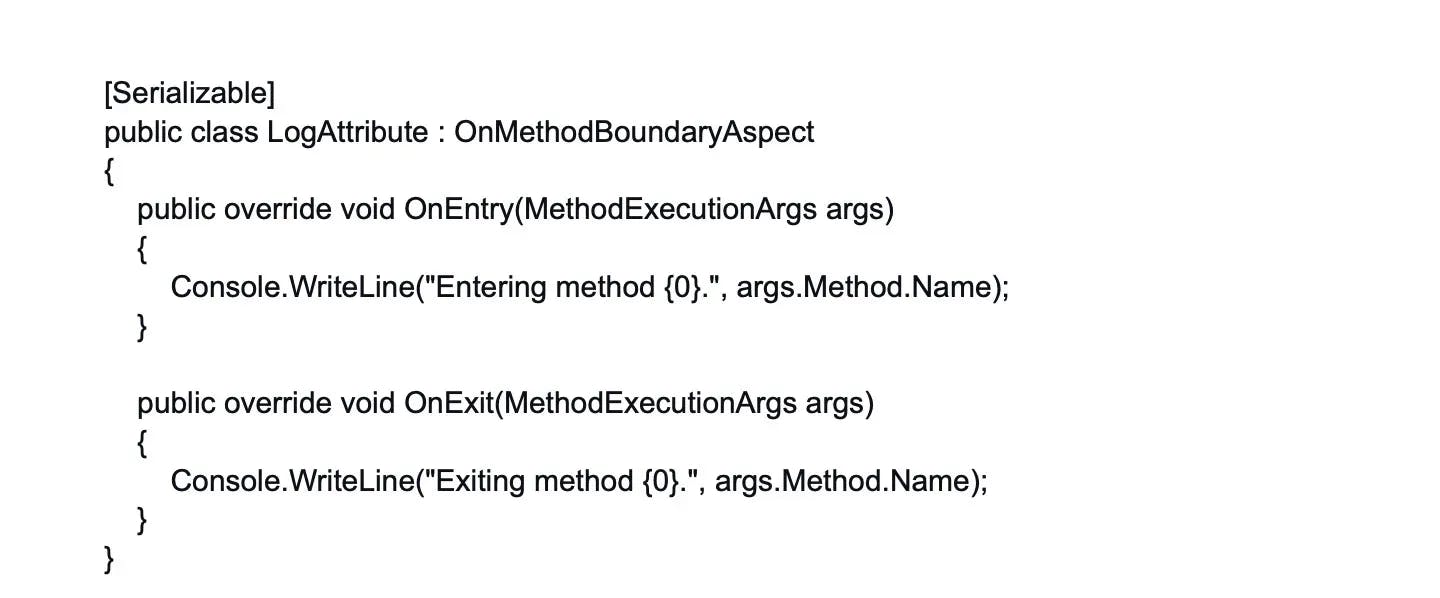

Attributes in .NET are powerful constructs that allow developers to add metadata—additional descriptive information—to various elements in the code, such as classes, methods, properties, and more. This metadata can be accessed at runtime using reflection, allowing for dynamic and flexible programming.

Attributes are written in square brackets [] and placed immediately before the code elements they apply to, usually on the line above. They can be used to control behavior, provide additional information, or introduce extra functionality.

In the example above, the [Serializable] attribute is used to indicate that the MyExampleClass class can be serialized, a capability often crucial for storage or network transmission.

In addition to the predefined attributes for serialization, compilation, marshalling, and so on, .NET allows you to create custom attributes to meet specific needs. This makes attributes a versatile and integral part of .NET, promoting declarative programming and code readability.
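A minimal sketch of defining a custom attribute and reading it back through reflection. The AuthorAttribute and ReportService types are hypothetical; GetCustomAttribute<T> is the standard extension method from System.Reflection:

```csharp
using System;
using System.Reflection;

// Read the custom attribute back at runtime via reflection.
var info = typeof(ReportService).GetCustomAttribute<AuthorAttribute>();
Console.WriteLine($"{info?.Name}, v{info?.Version}");   // Ada, v2

// A custom attribute is a class deriving from System.Attribute.
// AttributeUsage restricts which code elements it may be applied to.
[AttributeUsage(AttributeTargets.Class | AttributeTargets.Method)]
public sealed class AuthorAttribute : Attribute
{
    public string Name { get; }
    public int Version { get; set; } = 1;   // optional named argument

    public AuthorAttribute(string name) => Name = name;
}

// Applying the attribute attaches metadata to the class; the "Attribute" suffix may be omitted.
[Author("Ada", Version = 2)]
public class ReportService { }
```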

6. Explain the role of the ConfigurationManager class in .NET configuration management.

In .NET, the ConfigurationManager class is a vital part of the System.Configuration namespace and plays a crucial role in managing configuration settings. It is commonly used to read application settings, connection strings, or other configurations from the App.config (for Windows Applications) or the Web.config (for Web Applications) files.

These configuration files store key-value pairs in XML format. By using the ConfigurationManager, developers can easily access this data without having to directly parse the XML file. The data is cached, so subsequent requests for the same value are highly efficient.

Here's a simple example of how ConfigurationManager could be used to read an application setting:
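A minimal C# sketch, assuming an App.config with an appSettings entry keyed "MyConnectionString" (the value shown in the comment is illustrative) and, on .NET Core or .NET 5+, a reference to the System.Configuration.ConfigurationManager NuGet package:

```csharp
using System;
using System.Configuration;

// Assumed App.config content (illustrative value):
//   <configuration>
//     <appSettings>
//       <add key="MyConnectionString" value="Server=.;Database=Demo;Trusted_Connection=True;" />
//     </appSettings>
//   </configuration>

// AppSettings returns null when the key is missing, so supply a fallback explicitly.
string connectionString =
    ConfigurationManager.AppSettings["MyConnectionString"] ?? "(not configured)";

Console.WriteLine(connectionString);
```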

In this example, "MyConnectionString" would be a key in the App.config or Web.config file.

However, it's important to note that the collections exposed by ConfigurationManager.AppSettings and ConfigurationManager.ConnectionStrings are read-only. To write or update configuration settings, open a Configuration object (for example, with ConfigurationManager.OpenExeConfiguration), modify it, and call its Save method. Furthermore, ConfigurationManager is not built into .NET Core and .NET 5+; there it is available only through the System.Configuration.ConfigurationManager NuGet package for compatibility, and new projects typically use the configuration model provided by the Microsoft.Extensions.Configuration namespace instead.

7. What is the difference between an exe and a dll file in .NET?

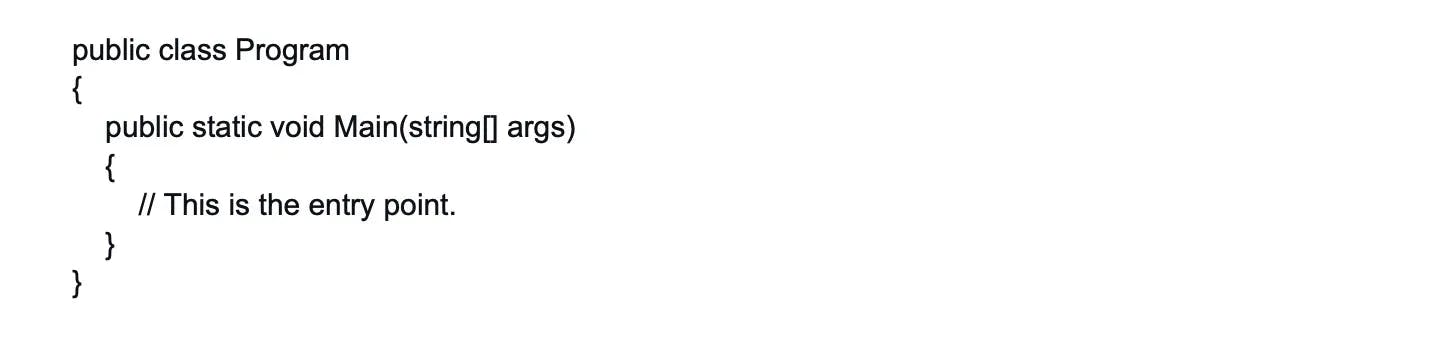

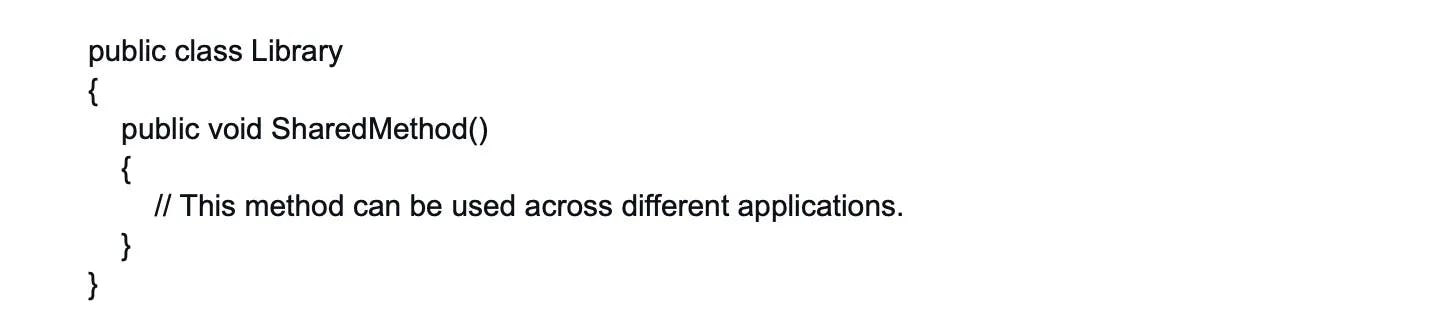

An exe (executable) file contains an application's entry point and is intended to be executed directly. It represents a standalone program.

On the other hand, a dll (dynamic-link library) file contains reusable code that can be referenced and used by multiple applications. It allows for code sharing and modular development.

At runtime, the Common Language Runtime (CLR) loads the exe and starts executing at its entry point (the Main method). The dlls it references are loaded into memory on demand, typically when code that uses one of their types is first needed.

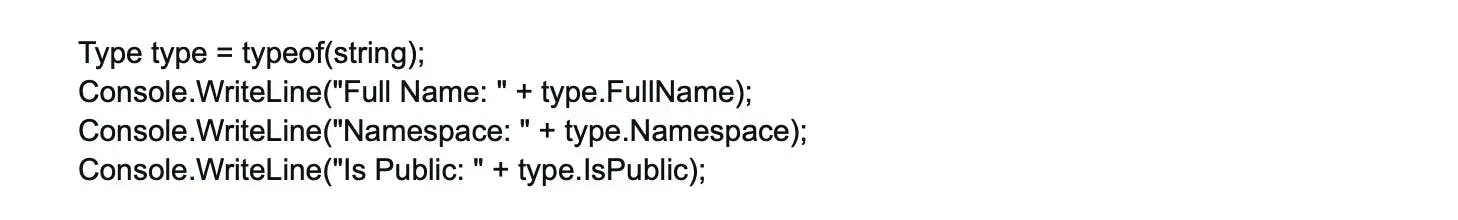

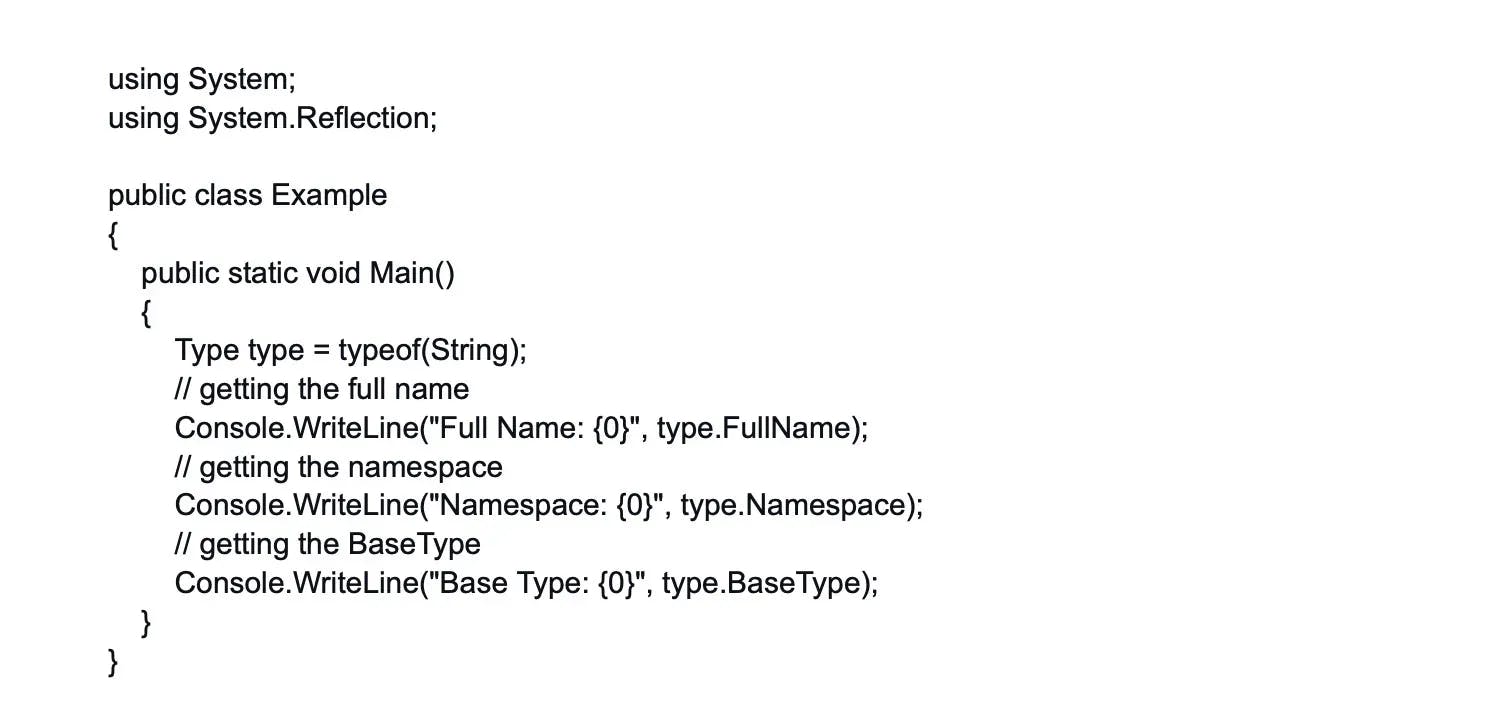

8. What is the purpose of the System.Reflection namespace in .NET?

The System.Reflection namespace provides classes and methods to inspect and manipulate metadata, types, and assemblies at runtime. It enables developers to dynamically load assemblies, create instances, invoke methods, and perform other reflection-related operations.

It's frequently used in scenarios where types are unknown at compile time, for example, when building plugin architectures, performing serialization/deserialization, implementing late binding, or performing type analysis and metadata visualization.

Here is a simple example of using Reflection to get information about a type:
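A minimal sketch; the Sample class is hypothetical and exists only to give reflection something to inspect. It lists the type's members, then creates an instance and invokes a method by name (late binding):

```csharp
using System;
using System.Reflection;

Type type = typeof(Sample);
Console.WriteLine($"Type: {type.FullName}");

// Public properties of the type.
foreach (PropertyInfo p in type.GetProperties())
    Console.WriteLine($"Property: {p.PropertyType.Name} {p.Name}");

// Public instance methods declared on Sample itself
// (this includes compiler-generated accessors such as get_Name and set_Name).
foreach (MethodInfo m in type.GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly))
    Console.WriteLine($"Method: {m.Name}");

// Late binding: create an instance, set a property, and invoke a method by name.
object instance = Activator.CreateInstance(type)!;
type.GetProperty("Name")!.SetValue(instance, "Reflection");
object? result = type.GetMethod("Greet")!.Invoke(instance, null);
Console.WriteLine(result);   // Hello, Reflection

// Hypothetical type used only for this sketch.
public class Sample
{
    public string Name { get; set; } = "";
    public string Greet() => $"Hello, {Name}";
}
```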

However, it's important to note that with great power comes great responsibility; due to its ability to uncover private data and call private methods, Reflection should be used judiciously and carefully to avoid compromising security or integrity.

9. Explain the concept of serialization and deserialization in .NET.

Serialization is the process of converting an object into a format that can be stored or transmitted, such as a stream of bytes or text in JSON or XML. Deserialization is the reverse process of reconstructing the object from the serialized data.

These mechanisms allow objects to be persisted, transferred over a network, or shared between different parts of an application.

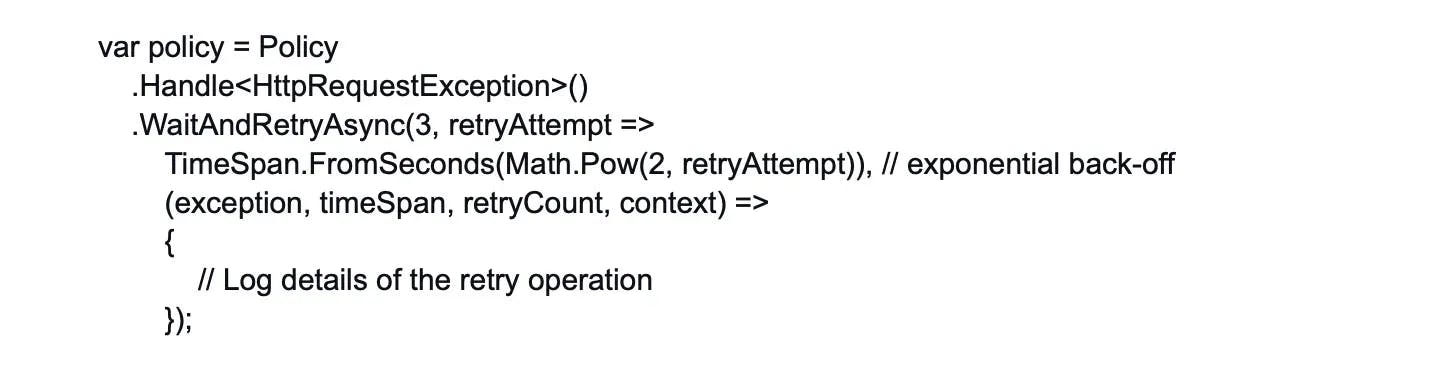

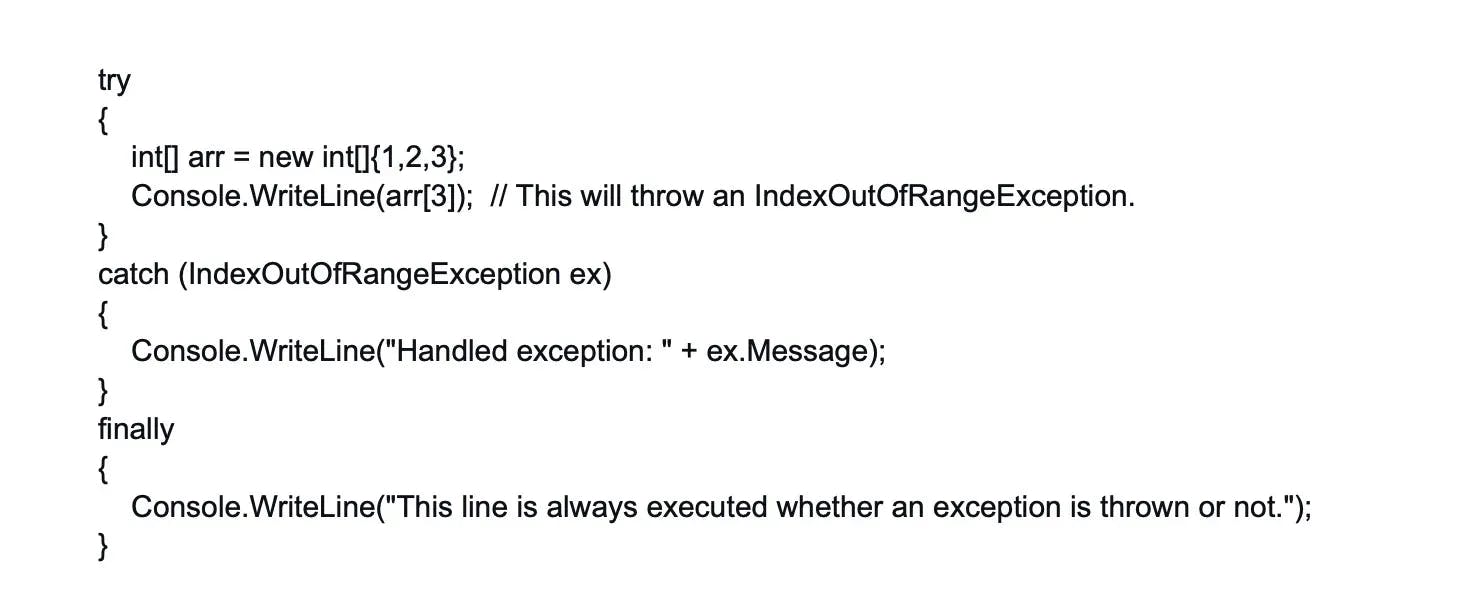
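A minimal sketch using System.Text.Json (built into .NET Core 3.0 and later); the Person record is hypothetical. JSON is only one possible format, chosen here because it needs no extra packages:

```csharp
using System;
using System.Text.Json;

var original = new Person("Ada", 36);

// Serialization: object -> JSON text
// (JsonSerializer.SerializeToUtf8Bytes produces the UTF-8 byte form instead).
string json = JsonSerializer.Serialize(original);
Console.WriteLine(json);   // {"Name":"Ada","Age":36}

// Deserialization: JSON text -> a new object carrying the same data.
Person? copy = JsonSerializer.Deserialize<Person>(json);
Console.WriteLine(copy == original);   // True (records compare by value)

// Hypothetical type for the sketch; records get value-based equality.
public record Person(string Name, int Age);
```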

10. What are the different types of exceptions in .NET and how are they handled?

There are various types of exceptions in .NET, all of which derive from the base System.Exception class. Some commonly used exceptions include System.ApplicationException, System.NullReferenceException, System.IndexOutOfRangeException, System.DivideByZeroException, and more.

In .NET, exceptions are handled using try-catch-finally blocks:

try: The try block contains the code segment that may throw an exception.

catch: The catch block is used to capture and handle exceptions if they occur. You can have multiple catch blocks for a single try block to handle different exception types separately.

finally: The finally block is optional and contains code that runs whether or not an exception occurs. It generally contains cleanup code.

Here's an example showing how to handle exceptions:
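A minimal sketch; the SafeDivide helper is hypothetical:

```csharp
using System;

Console.WriteLine(SafeDivide(10, 2));   // 5
Console.WriteLine(SafeDivide(10, 0));   // -1

static int SafeDivide(int a, int b)
{
    try
    {
        // May throw DivideByZeroException when b is 0.
        return a / b;
    }
    catch (DivideByZeroException ex)
    {
        // The most specific handlers must come first.
        Console.WriteLine($"Cannot divide by zero: {ex.Message}");
        return -1;
    }
    catch (Exception ex)
    {
        // Fallback for any other exception type.
        Console.WriteLine($"Unexpected error: {ex.Message}");
        throw;   // rethrow, preserving the original stack trace
    }
    finally
    {
        // Runs whether or not an exception occurred, even after a return.
        Console.WriteLine("Division attempted.");
    }
}
```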

11. What are assemblies in .NET?

Assemblies are the building blocks of .NET applications. They are self-contained units that contain compiled code (executable or library), metadata, and resources.

Each assembly contains a block of data called a 'manifest'. The manifest contains metadata about the assembly, such as:

- Assembly name and version.

- Security information.

- Information about the types and resources in the assembly.

- The list of referenced assemblies.

There are two types of assemblies in .NET:

Static or Process Assemblies: These are .exe or .dll files that are stored on disk and loaded into memory when needed. Most assemblies are static.

Dynamic Assemblies: These assemblies are created at runtime, typically with the System.Reflection.Emit APIs, and are not saved to disk before execution. They run directly from memory and are typically used for temporary tasks in the application.

Assemblies can be either private (used within a single application) or shared (used by multiple applications). They enable code reuse, versioning, and deployment.
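A minimal sketch that reads some of the manifest information listed above from the running program's own assembly through System.Reflection. The exact names and versions printed depend on how the project is built:

```csharp
using System;
using System.Reflection;

// The running program's own assembly.
Assembly assembly = Assembly.GetExecutingAssembly();
AssemblyName name = assembly.GetName();

// Identity recorded in the manifest.
Console.WriteLine($"Name: {name.Name}, Version: {name.Version}");

// Referenced assemblies listed in the manifest.
foreach (AssemblyName reference in assembly.GetReferencedAssemblies())
    Console.WriteLine($"References: {reference.Name} {reference.Version}");

// Types defined in the assembly, read from its metadata.
Console.WriteLine($"Defined types: {assembly.GetTypes().Length}");
```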

12. What is the Global Assembly Cache (GAC)?

The Global Assembly Cache (GAC) is a central repository in the .NET Framework where shared assemblies are stored. It provides a way to store and share assemblies globally on a computer so that multiple applications can use them.

Assemblies must have a strong name to be stored in the GAC. A strong name consists of the assembly's simple name, version number, culture information, and public key, backed by a digital signature. This ensures the uniqueness of each assembly in the cache.

The GAC supports versioning and allows different applications to reference the same assembly without maintaining multiple copies. Note that .NET Core and later versions do not use the GAC at all; this allows side-by-side installations of .NET versions and minimizes system-wide impact.

13. What is the role of globalization and localization in .NET?

Globalization refers to designing and developing applications that can adapt to different cultures, languages, and regions. Localization is the process of customizing an application to a specific culture or locale.

In .NET, globalization and localization are supported through features like resource files, satellite assemblies, and the CultureInfo class, allowing applications to display localized content and handle cultural differences.
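A minimal sketch of the CultureInfo side of this (resource files and satellite assemblies are not shown). The culture names are examples, and the code assumes the runtime has culture data available, which is not the case when .NET runs in globalization-invariant mode:

```csharp
using System;
using System.Globalization;

decimal price = 1234.5m;
var date = new DateTime(2024, 3, 1);

foreach (string name in new[] { "en-US", "de-DE", "fr-FR" })
{
    var culture = new CultureInfo(name);
    // Number and date formatting follow each culture's conventions
    // (decimal and group separators, day/month order).
    Console.WriteLine($"{name}: {price.ToString("N2", culture)} | {date.ToString("d", culture)}");
}
```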

14. What is the Common Type System (CTS)?

The Common Type System (CTS) is a set of rules and guidelines defined by the .NET Framework that ensure interoperability between different programming languages targeting the runtime.

It defines a common set of types, their behavior, and their representation in memory. The CTS allows objects to be used across different .NET languages without compatibility issues.

The CTS broadly classifies types into two categories:

Value Types: These include numeric data types, Boolean, char, date, time, etc. Value types directly store data and each variable has its own copy of the data.

Reference Types: These include class, interface, array, delegate, etc. Reference types store a reference to the location of the object in memory.
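A small sketch showing that C# keywords are aliases for CTS types (VB.NET's Integer maps to the same System.Int32), and that the type system records the value/reference distinction:

```csharp
using System;

// C# keywords are aliases for CTS types defined in the System namespace.
Console.WriteLine(typeof(int) == typeof(Int32));       // True
Console.WriteLine(typeof(string) == typeof(String));   // True

// The CTS also records whether a type is a value type or a reference type.
Console.WriteLine(typeof(int).IsValueType);        // True
Console.WriteLine(typeof(DateTime).IsValueType);   // True
Console.WriteLine(typeof(string).IsValueType);     // False
Console.WriteLine(typeof(int[]).IsValueType);      // False
```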

15. Explain the concept of garbage collection in .NET.

Garbage collection is an automatic memory management feature in .NET. It relieves developers of freeing memory manually: objects are allocated with new, and the runtime reclaims their memory once they are no longer in use.

The garbage collector tracks objects in memory and periodically frees up memory occupied by objects that are no longer referenced. It ensures efficient memory usage and helps prevent memory leaks and access violations.

The garbage collector uses a generational approach to manage memory more efficiently. It categorizes objects into three generations:

Generation 0: This is the youngest generation, consisting of short-lived objects, such as temporary objects created during a method call.

Generation 1: This generation is used as a buffer between short-lived objects and long-lived objects.

Generation 2: This generation comprises long-lived objects. Collection occurs less frequently in this generation compared to the other generations.

It's important to note that while the garbage collector helps in managing memory, developers still need to ensure that they're writing optimized code and managing non-memory resources like file handles or database connections efficiently.

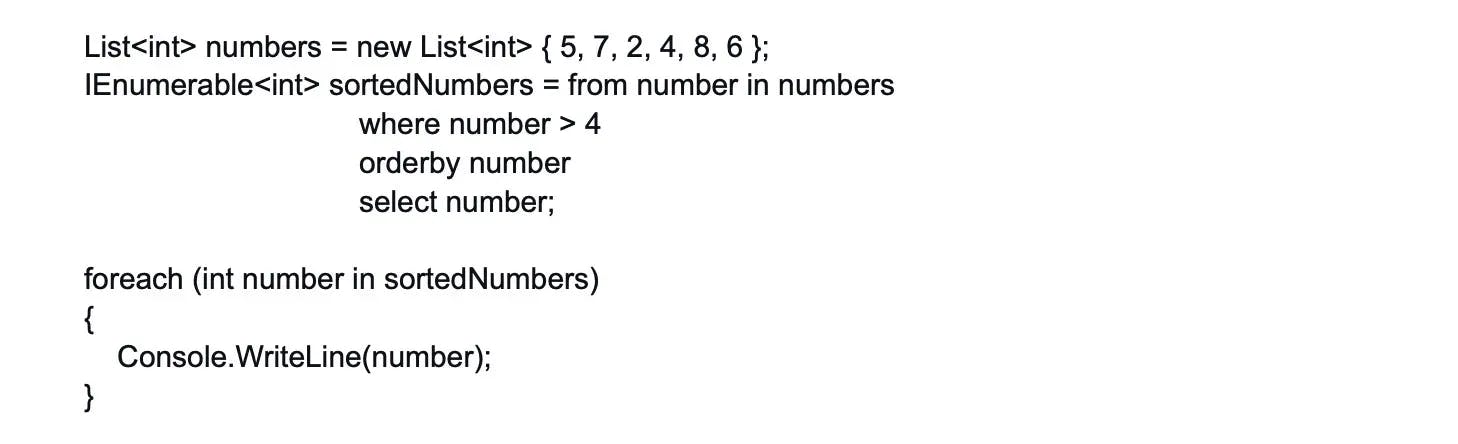
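A minimal sketch that observes the generations described above with the GC class. On a typical runtime the object is reported in generation 0, then 1, then 2, but promotion is a runtime implementation detail, so treat the printed numbers as illustrative. Calling GC.Collect is done here for demonstration only:

```csharp
using System;

var data = new byte[1024];   // a small object, normally allocated in generation 0
Console.WriteLine($"Max generation: {GC.MaxGeneration}");
Console.WriteLine($"After allocation: gen {GC.GetGeneration(data)}");

// Each forced collection normally promotes surviving objects one generation.
GC.Collect();
Console.WriteLine($"After 1st collection: gen {GC.GetGeneration(data)}");
GC.Collect();
Console.WriteLine($"After 2nd collection: gen {GC.GetGeneration(data)}");

// Keep 'data' reachable up to this point so it survives both collections.
GC.KeepAlive(data);

// A full collection also counts as a collection of every younger generation.
Console.WriteLine($"Generation 0 collections so far: {GC.CollectionCount(0)}");
```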

16. What are the different data access technologies available in .NET?

.NET Framework provides a variety of data access technologies for interacting with data sources such as databases and XML files. Here are some key ones:

ADO.NET

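ADO.NET is a set of classes that provides data access services for .NET applications. It lets applications interact with relational databases like SQL Server, Oracle, and MySQL through provider-specific classes. It supports both a connected model (for example, reading rows with a DataReader over an open connection) and a disconnected model (loading data into a DataSet or DataTable through a DataAdapter). ADO.NET supports various features, including connection management, query execution, data retrieval, and transaction handling.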

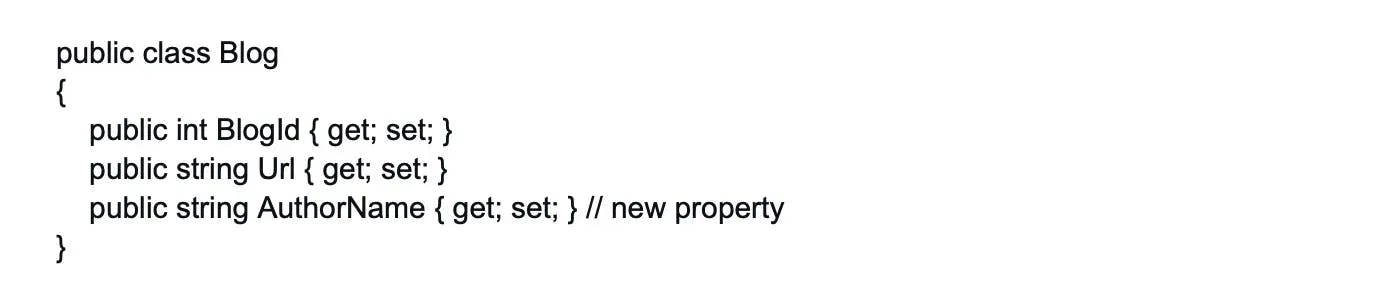

Entity Framework (EF)

Entity Framework is an open-source Object-Relational Mapping (ORM) framework for .NET applications provided by Microsoft. It enables developers to work with data as objects and properties. EF allows for database manipulations (like CRUD operations) using .NET objects, and automatically transforms these operations to SQL queries. Entity Framework Core (EF Core) is a lightweight, extensible, and cross-platform version of EF.

LINQ to SQL

LINQ to SQL is a component of .NET Framework that specifically provides a LINQ-based solution for querying and manipulating SQL Server databases as strongly typed .NET objects. It's a simple ORM that maps SQL Server database tables to .NET classes, allowing developers to manipulate data directly in .NET.

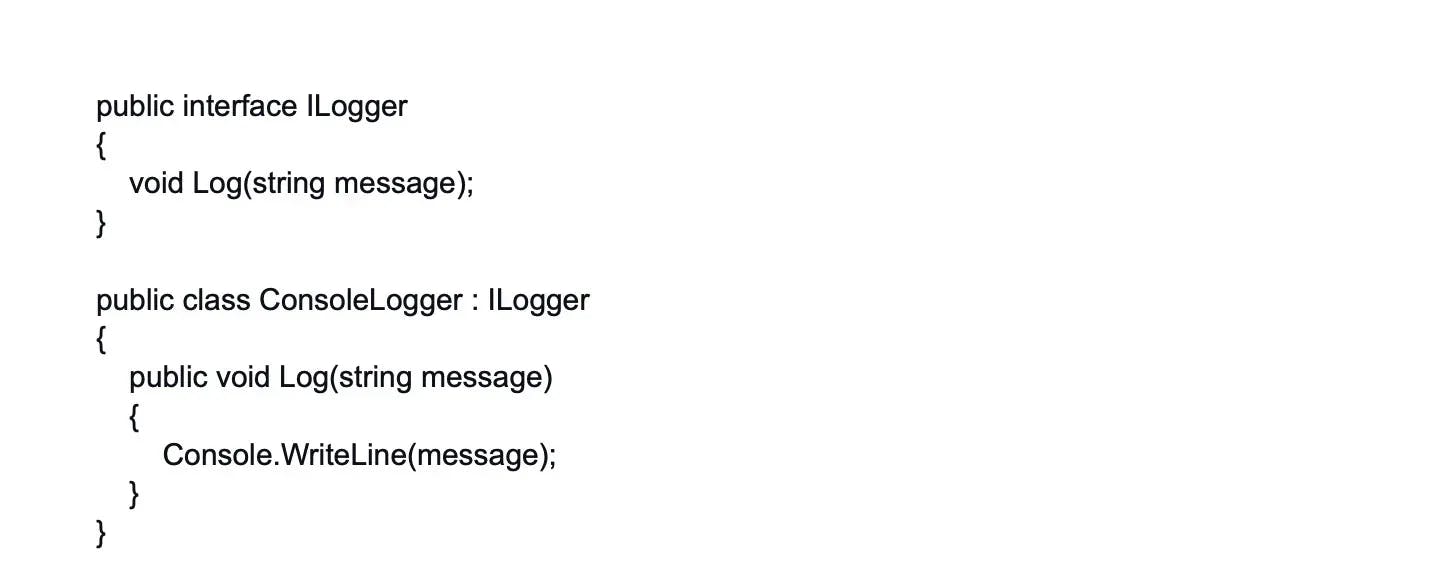

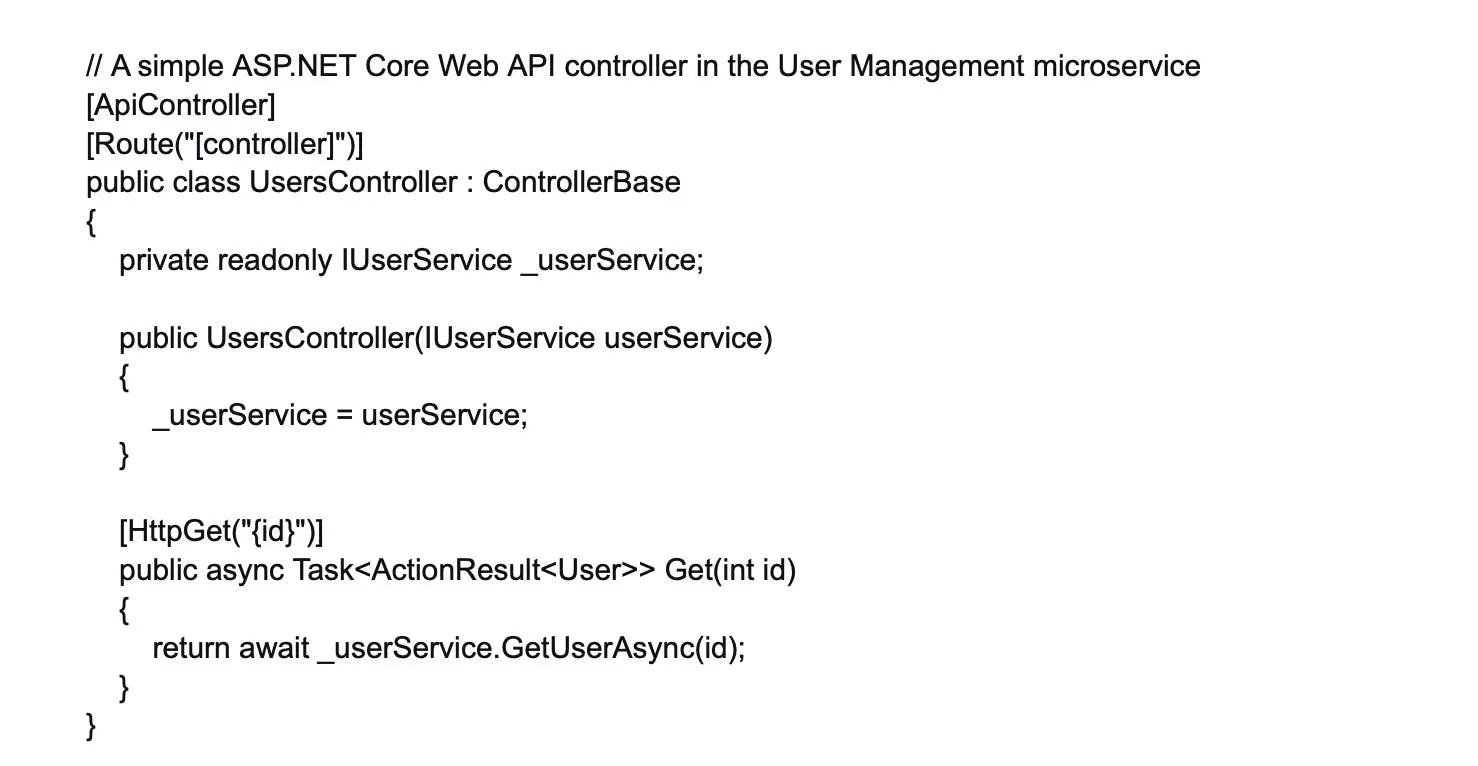

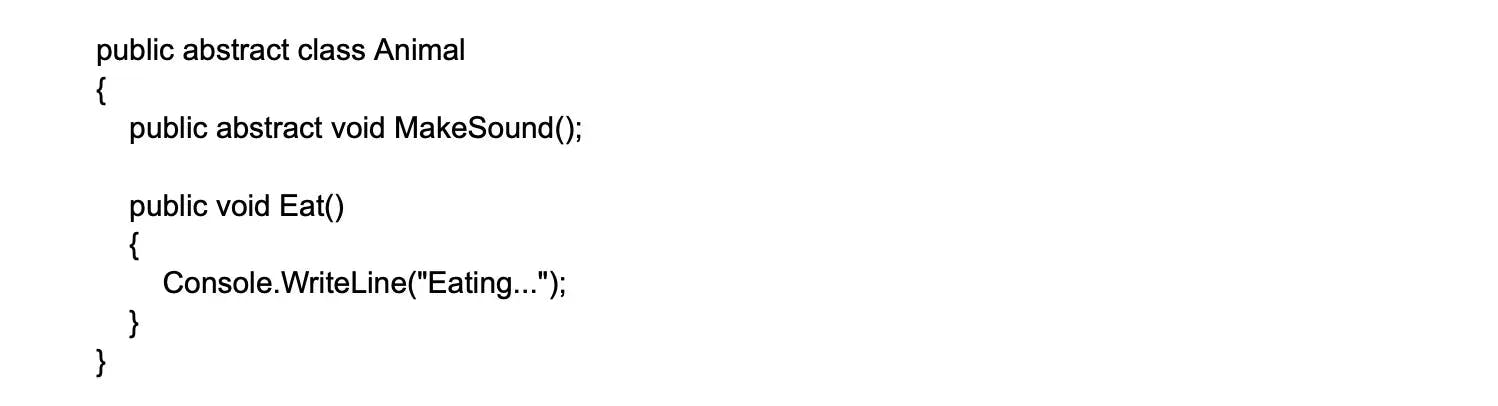
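Running a real query needs a database provider package and a server, so this sketch of the ADO.NET entry above sticks to the in-box disconnected classes (DataTable, DataRow) that hold data once it has been retrieved. The Products table and its rows are made-up sample data:

```csharp
using System;
using System.Data;

// An in-memory table, part of ADO.NET's disconnected model.
var products = new DataTable("Products");
products.Columns.Add("Id", typeof(int));
products.Columns.Add("Name", typeof(string));
products.Columns.Add("Price", typeof(decimal));

products.Rows.Add(1, "Keyboard", 49.99m);
products.Rows.Add(2, "Monitor", 189.00m);
products.Rows.Add(3, "Mouse", 19.50m);

// Filter and sort in memory, similar to a WHERE / ORDER BY clause.
DataRow[] affordable = products.Select("Price < 100", "Price ASC");
foreach (DataRow row in affordable)
    Console.WriteLine($"{row["Name"]}: {row["Price"]}");

// Against a real database, a provider's DataAdapter (e.g. SqlDataAdapter)
// would Fill this table from a query instead of adding rows by hand.
```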

17. What is the difference between an interface and an abstract class in .NET?

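An interface defines a contract of methods, properties, and events that a class must implement. A class can inherit from only one base class but can implement multiple interfaces, which gives a form of multiple inheritance and provides a way to achieve polymorphism. Interface members are public by default; before C# 8.0 they could not carry any access modifiers, while C# 8.0 and later also allow explicit modifiers and default implementations.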

For example, if an IAnimal interface declares a MakeSound method, any class that implements IAnimal is obliged to provide an implementation of MakeSound.

An abstract class is a class that cannot be instantiated and serves as a base for other classes. It can contain both abstract and non-abstract members. Unlike interfaces, abstract classes can hold instance state (fields) and constructors, which makes them useful when derived classes share common behavior and data. Since C# 8.0, interfaces can also provide default method implementations, but they still cannot hold instance fields.

For example, if an abstract Animal class declares an abstract MakeSound method and a concrete Eat method, classes that inherit Animal will have to provide an implementation of MakeSound, but they will inherit the Eat method as it is.
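A minimal sketch of both constructs. IAnimal, MakeSound, Animal, and Eat are the names used in the text; Dog, Duck, and ISwimmer are hypothetical additions that show single class inheritance combined with multiple interfaces:

```csharp
using System;

IAnimal[] animals = { new Dog(), new Duck() };
foreach (IAnimal animal in animals)
    Console.WriteLine(animal.MakeSound());   // Woof, then Quack

var duck = new Duck();
duck.Eat();    // inherited unchanged from Animal
duck.Swim();   // from the second contract, ISwimmer

// Interface: a pure contract. Every implementer must supply MakeSound.
public interface IAnimal
{
    string MakeSound();
}

// A second, hypothetical interface showing that a class can implement several.
public interface ISwimmer
{
    void Swim();
}

// Abstract class: cannot be instantiated; mixes abstract and concrete members.
public abstract class Animal : IAnimal
{
    public abstract string MakeSound();                    // must be overridden
    public void Eat() => Console.WriteLine("Eating...");   // shared implementation
}

public class Dog : Animal
{
    public override string MakeSound() => "Woof";
}

// One base class, but any number of interfaces.
public class Duck : Animal, ISwimmer
{
    public override string MakeSound() => "Quack";
    public void Swim() => Console.WriteLine("Paddling...");
}
```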

18. What is the role of the Common Intermediate Language (CIL) in the .NET Framework?

The Common Intermediate Language (CIL), formerly known as Microsoft Intermediate Language (MSIL), plays a crucial role in the .NET Framework. When you compile your .NET source code, it is not directly converted into machine code. Instead, it is first translated into CIL, an intermediate language that is platform-agnostic. This means it can run on any operating system that supports .NET, making your .NET applications cross-platform.

CIL is a low-level, stack-based instruction set that is closer to machine language than high-level languages like C# or VB.NET. It is stored in binary form inside the assembly, but it also has a human-readable text form that tools such as ildasm can display. During runtime, the Common Language Runtime (CLR) takes this CIL code and compiles it into machine code using Just-In-Time (JIT) compilation.

19. Define the concept of Just-In-Time (JIT) compilation in .NET.

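JIT compilation is a process in which the CLR compiles CIL code into machine code at runtime, just before it is executed. A method is typically compiled the first time it is called, and the resulting native code is reused for later calls. Because compilation happens on the target machine, the generated instructions can be tailored to the hardware the application is actually running on.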

20. What are the different types of collections available in the System.Collections namespace?

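The System.Collections namespace provides various non-generic collection types in .NET, including ArrayList, Hashtable, SortedList, Stack, and Queue. These collections offer different ways to store and access data, but because they store every element as object, they require casts and box value types. In new code, the generic equivalents in System.Collections.Generic (such as List<T>, Dictionary<TKey,TValue>, Stack<T>, and Queue<T>) are usually preferred.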
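A quick tour of the non-generic collections named above; the stored values are arbitrary:

```csharp
using System;
using System.Collections;

var list = new ArrayList { 1, "two", 3.0 };                   // resizable, holds any object
var table = new Hashtable { ["apple"] = 3, ["pear"] = 5 };    // key/value pairs looked up by hash
var sorted = new SortedList { ["b"] = 2, ["a"] = 1 };         // kept sorted by key
var stack = new Stack();                                      // last-in, first-out
var queue = new Queue();                                      // first-in, first-out

stack.Push("first");
stack.Push("second");
queue.Enqueue("first");
queue.Enqueue("second");

Console.WriteLine(list.Count);         // 3
Console.WriteLine(table["pear"]);      // 5
Console.WriteLine(sorted.GetKey(0));   // a
Console.WriteLine(stack.Pop());        // second
Console.WriteLine(queue.Dequeue());    // first
```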

21. What is the purpose of the System.Diagnostics namespace in .NET?

The System.Diagnostics namespace provides classes for interacting with system processes, event logs, performance counters, and debugging functionality in .NET. It allows developers to control and monitor processes, gather performance data, log and trace application events, and perform debugging tasks.

Here are some of the key classes and their purposes:

Process: Allows you to start and stop system processes, and also provides access to process-specific information such as the process ID, priority, and the amount of memory being used.

EventLog: Enables you to read from and write to the event log, which is a vital tool for monitoring system and application events.

PerformanceCounter: Allows you to measure the performance of your application by monitoring system-defined or application-defined performance counters.

Debug and Trace: These classes provide a set of methods and properties that help you debug your code and trace the execution of your application.

Stopwatch: Provides a set of methods and properties that you can use to accurately measure elapsed time.

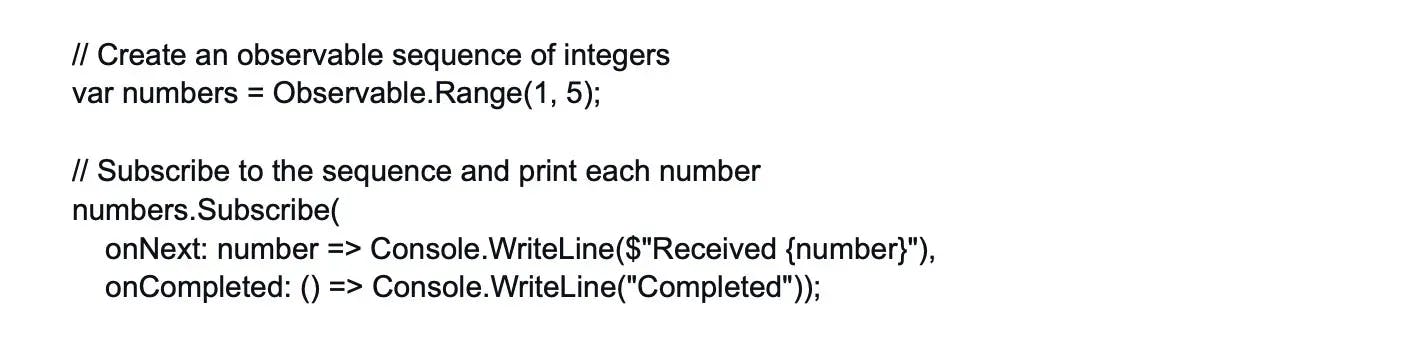

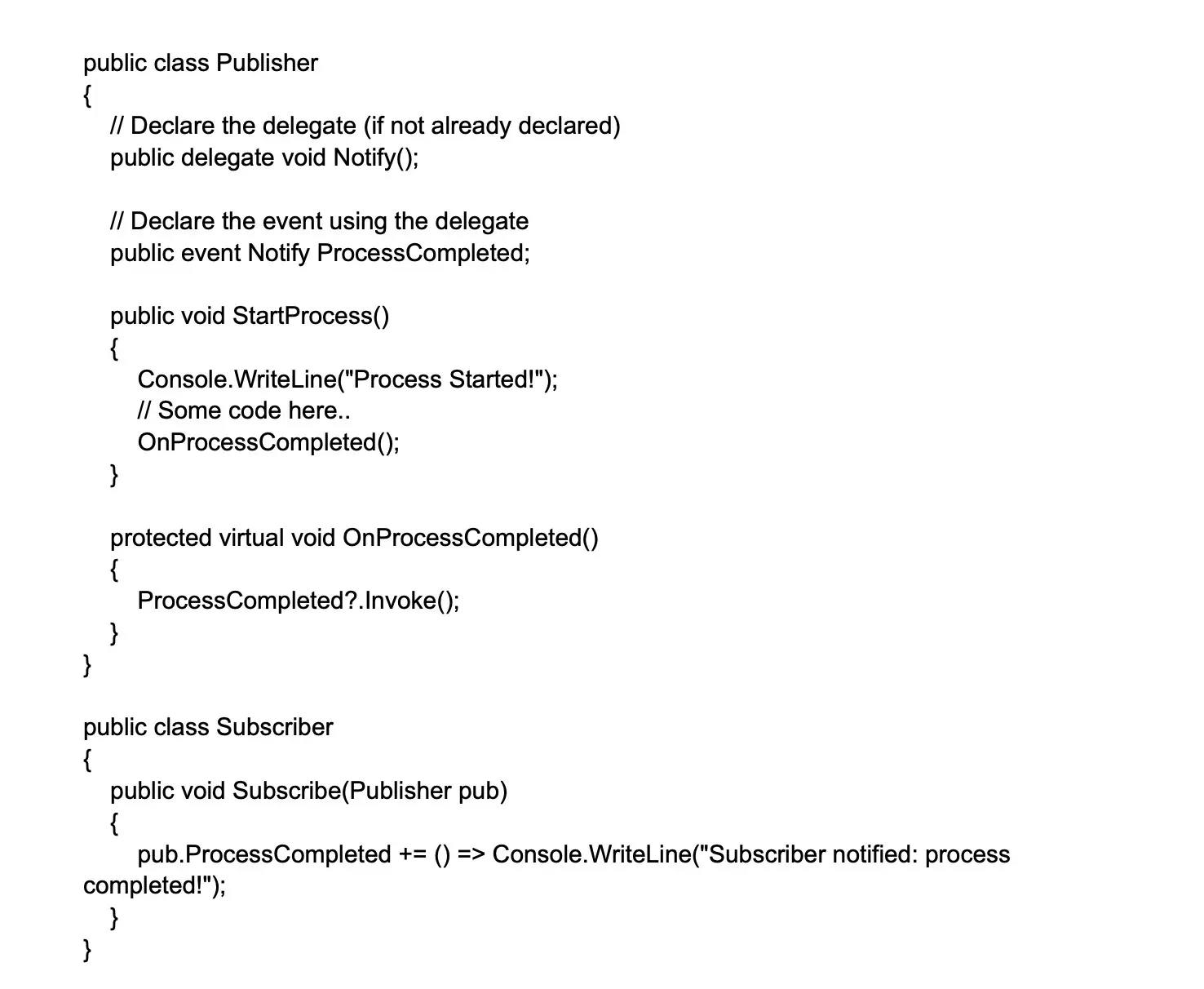
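A minimal sketch using a few of the classes listed above; the summing loop merely stands in for real work being timed:

```csharp
using System;
using System.Diagnostics;

// Stopwatch: high-resolution elapsed-time measurement.
var sw = Stopwatch.StartNew();
long sum = 0;
for (int i = 0; i < 1_000_000; i++) sum += i;
sw.Stop();
Console.WriteLine($"Sum {sum} computed in {sw.Elapsed.TotalMilliseconds:F2} ms");

// Process: information about the running process.
using Process current = Process.GetCurrentProcess();
Console.WriteLine($"PID {current.Id}, working set {current.WorkingSet64 / 1024} KB");

// Trace/Debug: messages go to the registered listeners (by default the
// debugger output, not the console). Debug calls are compiled only in Debug builds.
Trace.WriteLine("Trace message");
Debug.WriteLine("Debug-only message");
```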

22. Explain the concept of delegates and events in .NET.

Delegates in .NET are reference types that hold references to methods with a specific signature. They allow methods to be treated as entities that can be assigned to variables or passed as arguments to other methods.
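A minimal sketch of delegates in action, using the built-in Func and Action delegate types; Add, Multiply, and Apply are hypothetical helpers:

```csharp
using System;

// Func<int, int, int> is a built-in delegate type: any method taking two ints
// and returning an int matches it. (A custom equivalent could be declared as
// `delegate int BinaryOp(int a, int b);`.)
Func<int, int, int> op = Add;       // assign a method to a variable
Console.WriteLine(op(2, 3));        // 5

op = Multiply;                      // point the same variable at another method
Console.WriteLine(op(2, 3));        // 6

Console.WriteLine(Apply(Add, 4, 5));               // 9  (method passed as an argument)
Console.WriteLine(Apply((x, y) => x - y, 4, 5));   // -1 (lambda converted to a delegate)

// Multicast: one delegate can hold several methods, invoked in order.
Action<string> log = s => Console.WriteLine($"A: {s}");
log += s => Console.WriteLine($"B: {s}");
log("hello");

static int Add(int a, int b) => a + b;
static int Multiply(int a, int b) => a * b;
static int Apply(Func<int, int, int> f, int x, int y) => f(x, y);
```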

Events, on the other hand, are a language construct built on top of delegates. They provide a way for objects to notify other objects when a particular action or state change occurs. The class that sends (or raises) the event is called the publisher and the classes that receive (or handle) the event are called subscribers. Events encapsulate delegates and provide a standard pattern for handling notifications in a decoupled and extensible manner.

Here's a simple example of an event:
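A minimal sketch matching the description that follows. The Publisher and Subscriber classes and the ProcessCompleted event come from the text; using the built-in EventHandler delegate and the NotificationCount property are assumptions for illustration:

```csharp
using System;

var publisher = new Publisher();
var subscriber = new Subscriber(publisher);
publisher.Process();
Console.WriteLine(subscriber.NotificationCount);   // 1

public class Publisher
{
    // 'event' lets outside code only subscribe (+=) or unsubscribe (-=);
    // only Publisher itself can raise it.
    public event EventHandler? ProcessCompleted;

    public void Process()
    {
        Console.WriteLine("Processing...");
        OnProcessCompleted(EventArgs.Empty);
    }

    // Raise the event; ?. skips the call when there are no subscribers.
    protected virtual void OnProcessCompleted(EventArgs e) =>
        ProcessCompleted?.Invoke(this, e);
}

public class Subscriber
{
    public int NotificationCount { get; private set; }

    public Subscriber(Publisher publisher) =>
        publisher.ProcessCompleted += HandleProcessCompleted;

    private void HandleProcessCompleted(object? sender, EventArgs e)
    {
        NotificationCount++;
        Console.WriteLine("Subscriber notified: process completed.");
    }
}
```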

In this example, the Publisher class has an event ProcessCompleted that is raised when a process is completed. The Subscriber class subscribes to this event and provides a handler that is called when the event is raised. This allows the Subscriber to be notified whenever the Publisher completes a process, without the Publisher needing to know anything about the Subscriber. This is a fundamental part of the event-driven programming paradigm.

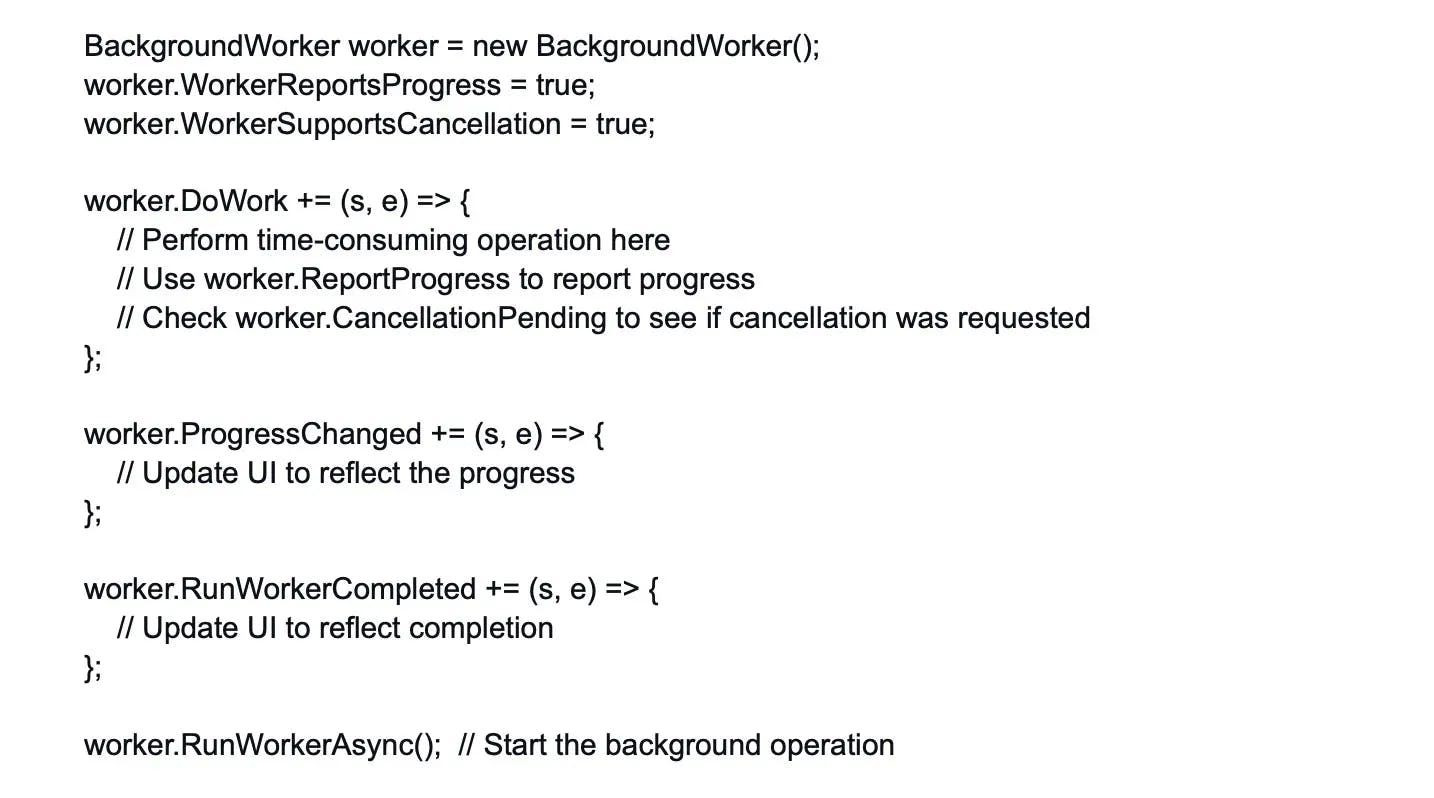

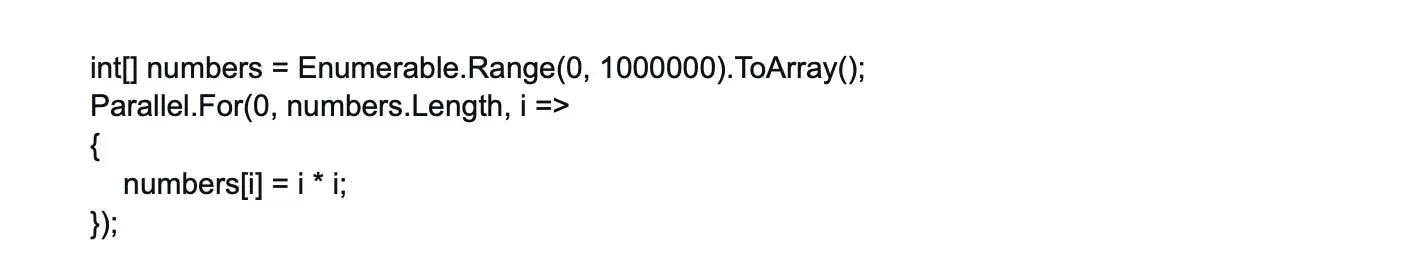

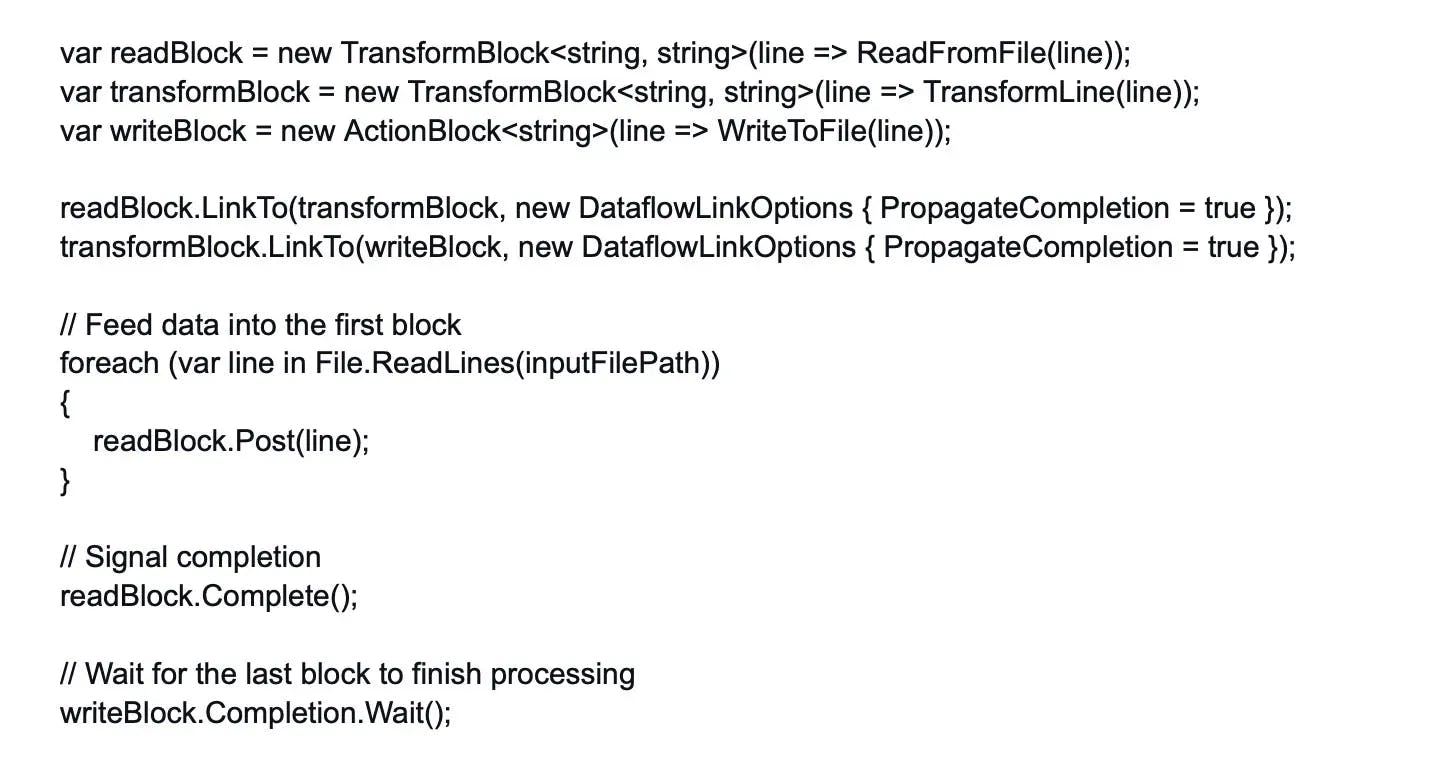

23. What is the role of the System.Threading namespace in .NET multithreading?

The System.Threading namespace in .NET provides classes and constructs for creating and managing multithreaded applications. It offers types such as Thread, ThreadPool, Mutex, Monitor, and Semaphore, which allow developers to control thread execution, synchronize access to shared resources, and coordinate communication between threads.
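A minimal sketch contrasting an unsynchronized counter with two synchronization options from System.Threading: the lock statement (built on Monitor) and Interlocked. The thread and iteration counts are arbitrary:

```csharp
using System;
using System.Threading;

int unsafeCount = 0;
int lockedCount = 0;
int interlockedCount = 0;
object gate = new object();

void Work()
{
    for (int i = 0; i < 100_000; i++)
    {
        unsafeCount++;                                 // data race: updates can be lost
        lock (gate) { lockedCount++; }                 // Monitor-based mutual exclusion
        Interlocked.Increment(ref interlockedCount);   // atomic increment
    }
}

var threads = new Thread[4];
for (int t = 0; t < threads.Length; t++)
{
    threads[t] = new Thread(Work);
    threads[t].Start();
}
foreach (Thread th in threads) th.Join();   // wait for every thread to finish

Console.WriteLine($"unsynchronized: {unsafeCount}");   // may be less than 400000
Console.WriteLine($"lock:           {lockedCount}");   // 400000
Console.WriteLine($"Interlocked:    {interlockedCount}");   // 400000
```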

24. What is the purpose of the using statement in C#? How does it relate to resource management?

The using statement in C# ensures the automatic disposal of objects that implement the IDisposable interface, typically objects that wrap unmanaged resources such as database connections, file streams, or network sockets. It guarantees that the object's Dispose method is called when the code block within the using statement is exited, even if an exception is thrown (the compiler expands it into a try/finally block). It simplifies resource management and helps prevent resource leaks by providing a convenient syntax for working with disposable objects.
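A minimal sketch that makes disposal visible; TrackedResource is a hypothetical IDisposable type standing in for a file, connection, or socket:

```csharp
using System;

// using statement: Dispose runs when the block exits.
using (var first = new TrackedResource("A"))
{
    first.Use();
}   // A is disposed here

// using declaration (C# 8+): Dispose runs at the end of the enclosing scope,
// even when that scope is left because of an exception.
try
{
    using var second = new TrackedResource("B");
    throw new InvalidOperationException("boom");
}
catch (InvalidOperationException)
{
    Console.WriteLine("Caught the exception; B was already disposed.");
}

Console.WriteLine($"Disposed: {TrackedResource.DisposeCount}");   // Disposed: 2

// Hypothetical disposable type that reports when it is acquired and released.
public sealed class TrackedResource : IDisposable
{
    public static int DisposeCount { get; private set; }
    private readonly string _name;

    public TrackedResource(string name)
    {
        _name = name;
        Console.WriteLine($"{_name}: acquired");
    }

    public void Use() => Console.WriteLine($"{_name}: in use");

    public void Dispose()
    {
        DisposeCount++;
        Console.WriteLine($"{_name}: disposed");
    }
}
```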

25. Explain the concept of boxing and unboxing in .NET.

Boxing is the process of converting a value type to the corresponding reference type representation on the heap, such as converting an integer to an object. Unboxing, on the other hand, is the reverse process of extracting the value type from the boxed object. Boxing is necessary when a value type needs to be treated as an object, for example, when passing value types to methods that accept object parameters. Unboxing allows retrieving the value from the boxed object to perform value-specific operations.
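A minimal sketch of boxing and unboxing, including the common pitfall of unboxing to the wrong type and the generic alternative that avoids boxing altogether:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

int number = 42;
object boxed = number;      // boxing: the value is copied into a new heap object
int unboxed = (int)boxed;   // unboxing: an explicit cast copies the value back out

number = 7;                 // the box holds its own copy, so it is unaffected
Console.WriteLine(boxed);     // 42
Console.WriteLine(unboxed);   // 42

// Unboxing must target the exact value type that was boxed.
try { long wrong = (long)boxed; }
catch (InvalidCastException) { Console.WriteLine("Cannot unbox an int as a long"); }

// Non-generic collections box each value; generic ones store ints directly.
var legacy = new ArrayList { 1, 2, 3 };   // three boxed ints
var modern = new List<int> { 1, 2, 3 };   // no boxing
Console.WriteLine(legacy.Count + modern.Count);   // 6
```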

26. What are extension methods in C# and how are they used?

Extension methods in C# allow developers to add new methods to existing types without modifying their source code. They are defined as static methods within a static class, and the first parameter of the extension method specifies the type being extended, preceded by the 'this' keyword. Extension methods enable adding functionality to types without inheritance or modifying the type hierarchy, making it easier to extend third-party or framework classes.
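A minimal sketch; StringExtensions and its two methods are hypothetical. WordCount counts space-separated words only, to keep the example short:

```csharp
using System;

Console.WriteLine("hello world".WordCount());   // 2
Console.WriteLine("racecar".IsPalindrome());    // True

// Extension methods live in a non-generic, non-nested static class.
public static class StringExtensions
{
    // 'this string' lets WordCount be called as if it were an instance method on string.
    public static int WordCount(this string text) =>
        text.Split(' ', StringSplitOptions.RemoveEmptyEntries).Length;

    public static bool IsPalindrome(this string text)
    {
        for (int i = 0, j = text.Length - 1; i < j; i++, j--)
            if (text[i] != text[j]) return false;
        return true;
    }
}
```

The call "hello world".WordCount() is compiled into the ordinary static call StringExtensions.WordCount("hello world"); string itself is untouched.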

27. What is the purpose of the System.Net.Sockets namespace in .NET networking?

The System.Net.Sockets namespace provides classes for network programming, particularly for creating client and server applications that communicate over TCP/IP or UDP protocols. It includes classes like TcpClient, TcpListener, UdpClient, and Socket, which enable developers to establish network connections, send and receive data, and handle network-related operations in .NET.

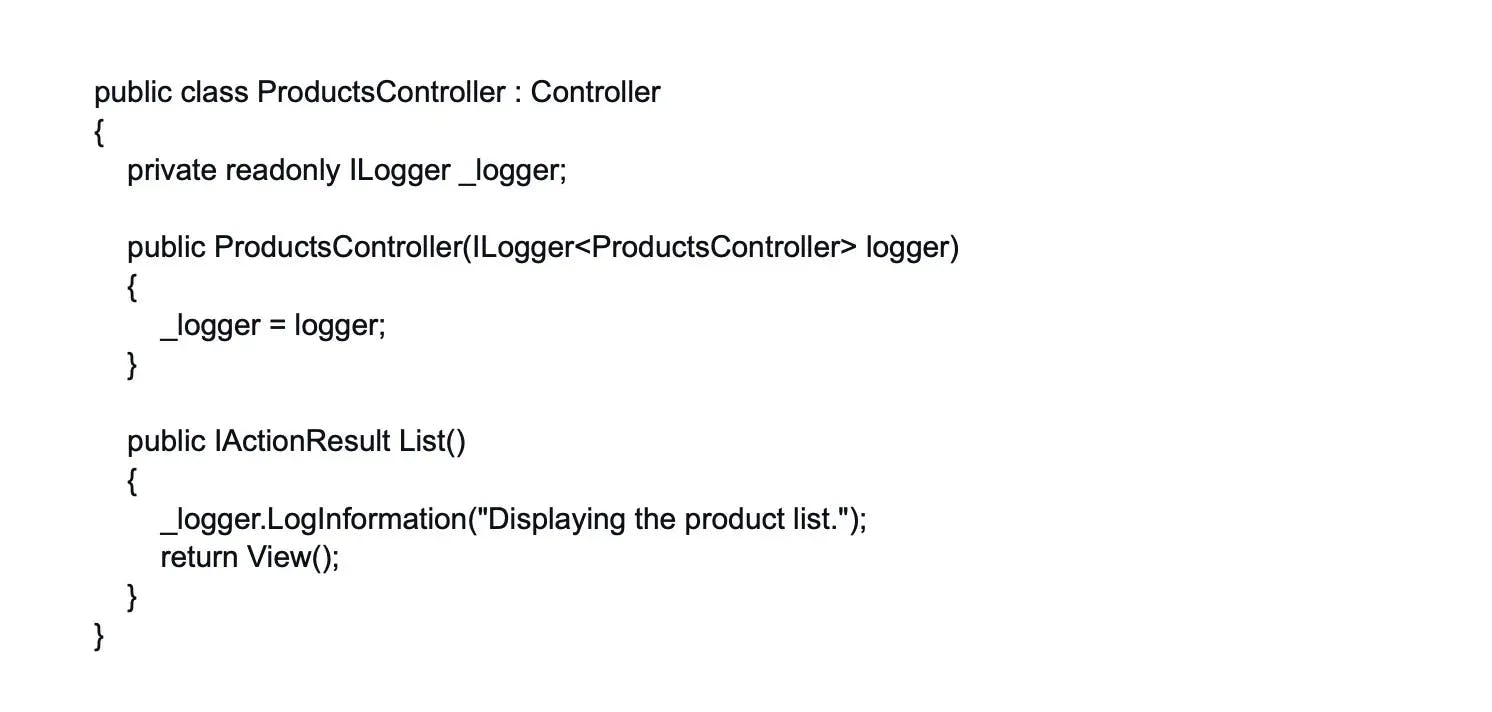

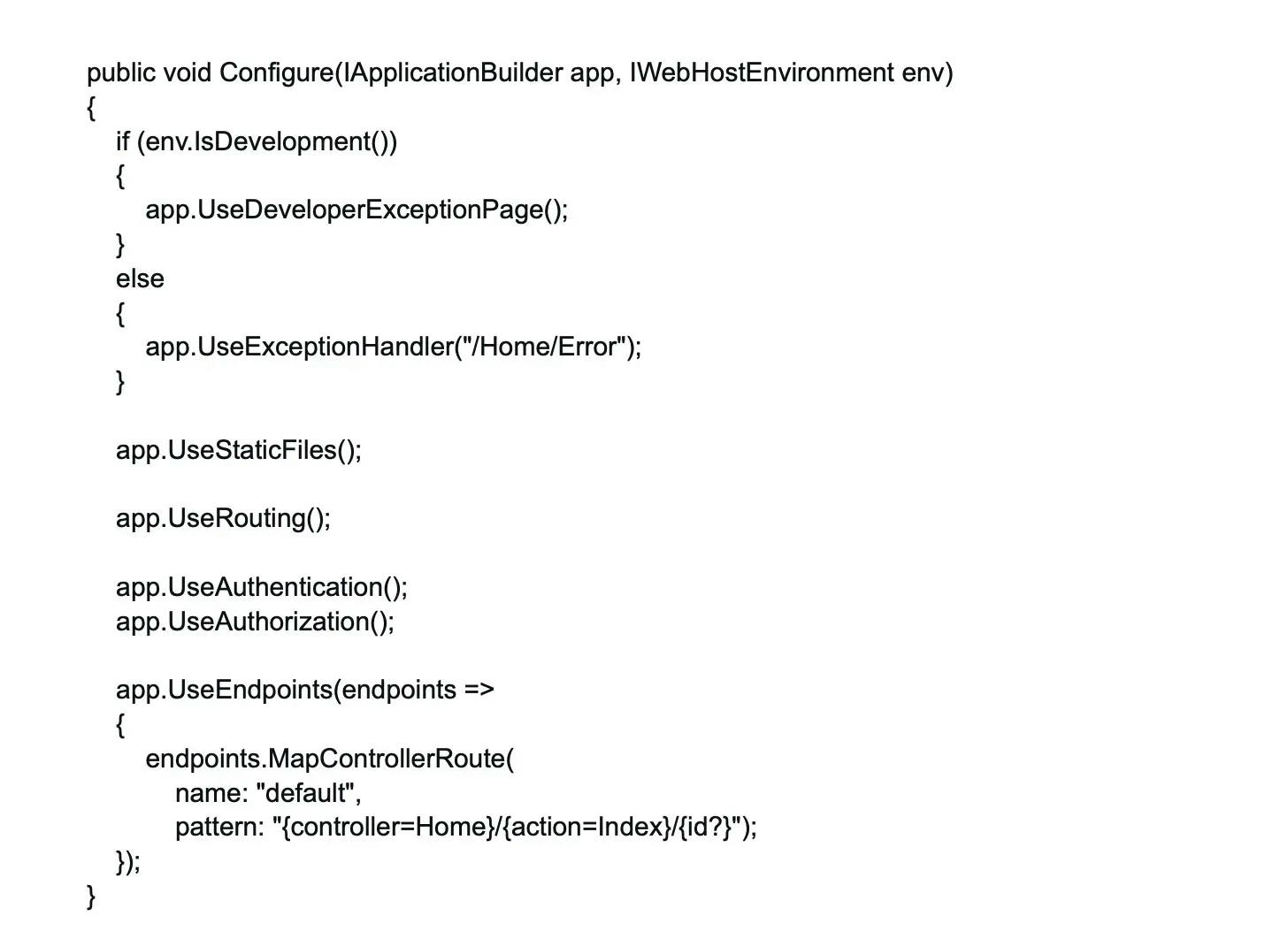
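A minimal loopback echo sketch using TcpListener and TcpClient. Port 0 lets the operating system choose a free port, and the "ping" message is arbitrary:

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Server: listen on the loopback address; port 0 asks the OS for any free port.
var listener = new TcpListener(IPAddress.Loopback, 0);
listener.Start();
int port = ((IPEndPoint)listener.LocalEndpoint).Port;

// Accept a single client and echo back whatever it sends.
Task serverTask = Task.Run(async () =>
{
    using TcpClient connection = await listener.AcceptTcpClientAsync();
    NetworkStream serverStream = connection.GetStream();
    var inbox = new byte[1024];
    int received = await serverStream.ReadAsync(inbox, 0, inbox.Length);
    await serverStream.WriteAsync(inbox, 0, received);
});

// Client: connect, send a short message, read the echo.
// (One ReadAsync is enough for a tiny loopback message; real protocols loop
// until a complete message has arrived, because TCP is a byte stream.)
string echoed;
using (var client = new TcpClient())
{
    await client.ConnectAsync(IPAddress.Loopback, port);
    NetworkStream stream = client.GetStream();
    byte[] message = Encoding.UTF8.GetBytes("ping");
    await stream.WriteAsync(message, 0, message.Length);

    var buffer = new byte[1024];
    int read = await stream.ReadAsync(buffer, 0, buffer.Length);
    echoed = Encoding.UTF8.GetString(buffer, 0, read);
}

Console.WriteLine($"Echo: {echoed}");
await serverTask;
listener.Stop();
```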

28. Explain the concept of inversion of control (IoC). How is it achieved in .NET?

Inversion of Control (IoC) is a design principle that promotes loose coupling and modularity by inverting the traditional flow of control in software systems. Instead of objects creating and managing their dependencies, IoC delegates the responsibility of creating and managing objects to a container or framework. In .NET, IoC is commonly achieved through Dependency Injection (DI), in which an object receives its dependencies (usually through its constructor) instead of creating them. A DI container, such as the built-in Microsoft.Extensions.DependencyInjection used by ASP.NET Core, creates the objects and injects their dependencies, enabling flexible configuration and easier testing.
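A minimal sketch of constructor injection wired by hand; IMessageSender, ConsoleSender, FakeSender, and OrderService are hypothetical. The commented lines indicate roughly how a container such as Microsoft.Extensions.DependencyInjection (a separate NuGet package) would do the wiring instead:

```csharp
using System;

// Composition root: the caller, not OrderService, decides which sender to use.
IMessageSender sender = new ConsoleSender();
var service = new OrderService(sender);
service.PlaceOrder("A-100");

// In a test, a fake can be injected without changing OrderService.
var fake = new FakeSender();
new OrderService(fake).PlaceOrder("T-1");
Console.WriteLine(fake.LastMessage);   // Order T-1 placed

// With a DI container, roughly:
//   var services = new ServiceCollection();
//   services.AddSingleton<IMessageSender, ConsoleSender>();
//   services.AddTransient<OrderService>();
//   var provider = services.BuildServiceProvider();
//   provider.GetRequiredService<OrderService>().PlaceOrder("A-101");

public interface IMessageSender { void Send(string message); }

public class ConsoleSender : IMessageSender
{
    public void Send(string message) => Console.WriteLine($"[console] {message}");
}

public class FakeSender : IMessageSender
{
    public string? LastMessage { get; private set; }
    public void Send(string message) => LastMessage = message;
}

// OrderService depends only on the abstraction and never creates its own sender.
public class OrderService
{
    private readonly IMessageSender _sender;
    public OrderService(IMessageSender sender) => _sender = sender;
    public void PlaceOrder(string id) => _sender.Send($"Order {id} placed");
}
```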

29. What is the difference between string and StringBuilder in .NET?

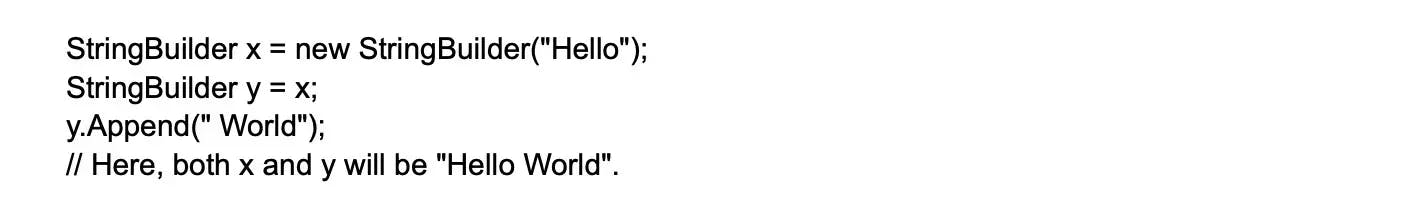

In .NET, both string and StringBuilder are used to work with strings, but they behave differently.

A string is an immutable object. This means once a string object is created, its value cannot be changed. When you modify a string (for example, by concatenating it with another string), a new string object is created in memory to hold the new value. This can lead to inefficiency if you're performing a large number of string manipulations.

On the other hand, StringBuilder is mutable. When you modify a StringBuilder object, the changes are made to the existing object itself, without creating a new one. This makes StringBuilder more efficient for scenarios where you need to perform extensive manipulations on a string.

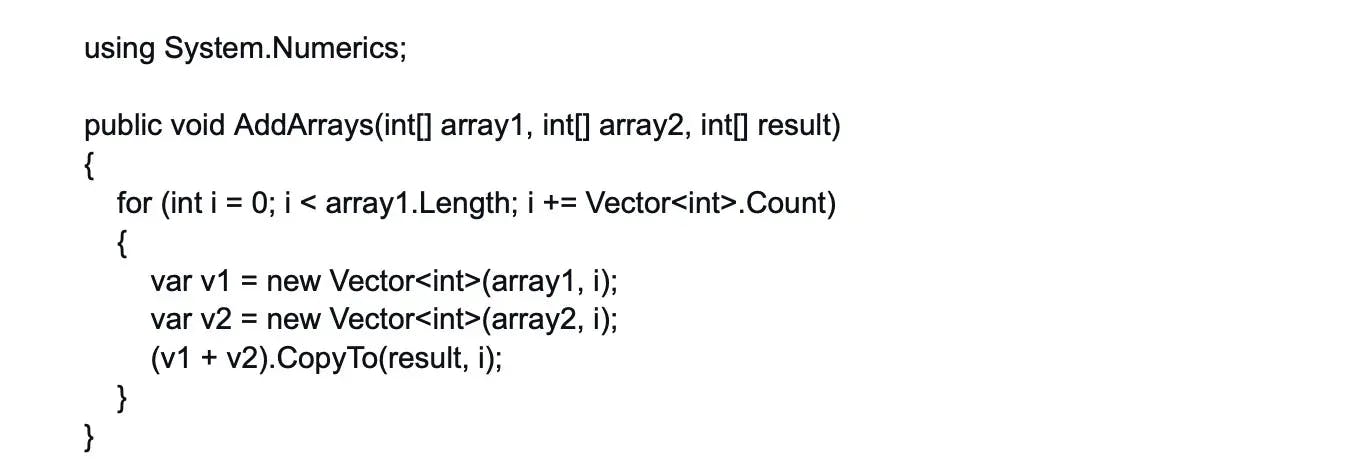
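A minimal sketch of the difference; the loop bound is arbitrary and far too small to show a measurable speed gap, so the point is the allocation behavior described in the comments:

```csharp
using System;
using System.Text;

// string: every += allocates a new string and copies the old contents into it.
string s = "";
for (int i = 0; i < 5; i++)
    s += i;

// StringBuilder: appends into an internal buffer that grows as needed.
var sb = new StringBuilder();
for (int i = 0; i < 5; i++)
    sb.Append(i);

Console.WriteLine(s);               // 01234
Console.WriteLine(sb.ToString());   // 01234

// Immutability in action: string methods return new instances.
string original = "hello";
string upper = original.ToUpperInvariant();
Console.WriteLine(original);   // hello
Console.WriteLine(upper);      // HELLO
```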

30. Explain the concept of operator overloading in C# and provide an example.

Operator overloading in C# allows developers to define and customize the behavior of operators for user-defined types. It provides the ability to redefine the behavior of operators such as +, -, *, /, ==, and != to work with custom types. For example, a developer can overload the + operator for a custom Vector class to define vector addition, allowing expressions like vector1 + vector2 to perform the desired addition operation based on the semantics of the Vector class.
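A minimal sketch of the Vector example described above. It is written as a readonly struct rather than a class so that == needs no null checks; the member names are illustrative:

```csharp
using System;

var v1 = new Vector(1, 2);
var v2 = new Vector(3, 4);

Console.WriteLine(v1 + v2);                  // (4, 6)
Console.WriteLine(v2 - v1);                  // (2, 2)
Console.WriteLine(v1 * 3);                   // (3, 6)
Console.WriteLine(v1 == new Vector(1, 2));   // True

// Hypothetical immutable 2-D vector type.
public readonly struct Vector : IEquatable<Vector>
{
    public double X { get; }
    public double Y { get; }
    public Vector(double x, double y) { X = x; Y = y; }

    // Overloaded operators are public static methods declared with 'operator'.
    public static Vector operator +(Vector a, Vector b) => new(a.X + b.X, a.Y + b.Y);
    public static Vector operator -(Vector a, Vector b) => new(a.X - b.X, a.Y - b.Y);
    public static Vector operator *(Vector v, double k) => new(v.X * k, v.Y * k);

    // == and != must be overloaded as a pair; Equals and GetHashCode stay consistent.
    public static bool operator ==(Vector a, Vector b) => a.Equals(b);
    public static bool operator !=(Vector a, Vector b) => !a.Equals(b);
    public bool Equals(Vector other) => X == other.X && Y == other.Y;
    public override bool Equals(object? obj) => obj is Vector v && Equals(v);
    public override int GetHashCode() => HashCode.Combine(X, Y);

    public override string ToString() => $"({X}, {Y})";
}
```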

Wrapping up

While hiring managers can utilize these questions to identify qualified applicants that match their job requirements, .NET developers can use this resource to improve their preparedness and confidence throughout the recruiting process.

If you are looking to hire .NET developers or apply for remote .NET jobs, join Turing, an AI-powered deep-vetting talent platform that matches companies with the engineering talent they need to succeed.
